
December 11, 2025

Onboarding Your AI: how to roll out learning software like Solid in the real world

Yoni Leitersdorf

CEO & Co-Founder

Rolling out AI that learns your data is less about switching on features and more about teaching a new teammate, together.

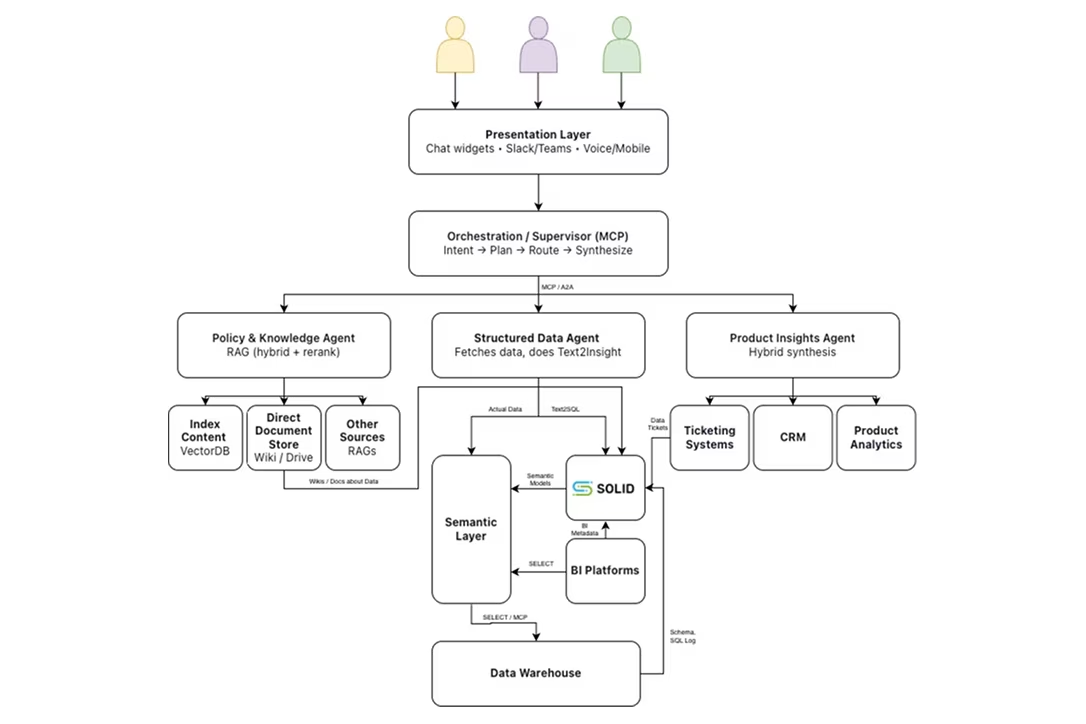

Imagine a new senior analyst joining your company. They are smart. They have seen a lot of different data stacks. They know SQL, modern BI tools, and even your industry. On day one, they still know almost nothing about your business.

You do not measure their success after a two-hour orientation and a couple of docs. You expect a ramp-up: first week, first month, first quarter. You give them access to your tools, let them shadow people, and you watch how quickly they start making good decisions with your data.

That is exactly how you should think about rolling out an AI-based technology like Solid. Solid is a piece of software, but it behaves much more like a new team member than like a static SaaS feature. It has to learn your environment. It will be wrong sometimes. It will get better. And the way you structure that learning journey is what makes the POC and rollout work or fail. In this post I want to talk about that journey.

How Solid learns your world

When we plug Solid into an organization, it does not start from a blank screen. It starts reading. A lot. Solid automatically pulls in things like:

- Your data warehouse schema

- SQL query logs

- BI models, dashboards and how they are used

- Data documentation and wikis (most organizations have very little of this, and that’s fine)

- Jira tickets that talk about data issues or definitions

- Your public website and help center

- And usually a few more odd places where context is hiding

If you read Your Data’s Diary: Why AI Needs to Read It, you have seen this idea before. Your data stack is already full of tiny breadcrumbs that explain how the business actually thinks. Solid treats those as training material.

From that, the platform can usually get to something like 70 percent understanding on its own:

- It can map core entities and tables

- It can understand how business concepts manifest themselves in the data

- It can see which dashboards actually matter

- It can infer common metrics and how they are calculated

That initial pass is fast. It is also imperfect. The last 30 percent is where the real journey starts.

The 70 percent rule (and why it is a feature, not a bug)

Traditional SaaS trained us to think in binaries:

- Does the feature work or not?

- Is the integration live or not?

- Is the dashboard correct or not?

With learning software like Solid, that mental model breaks. If you wait for “100 percent accuracy” before you let anyone touch the system, you will never ship. At the same time, if you treat every wrong answer as a catastrophic bug, your team will lose trust before the AI even gets a chance to learn.

In The Ghost in the Machine: How Solid drastically accelerates semantic model generation, we showed how much of the semantic model can be auto-generated if you give the AI enough signals. But even there, the goal is not perfection out of the box. The goal is to shortcut the boring 70 percent so that humans can focus on the nuanced 30 percent.

That 30 percent is made of things like:

- Subtle business rules that only live in someone’s head

- Edge cases in how a metric is defined for one region or one product line

- Legacy decisions that everyone hates but the business still relies on

- Political constraints about which numbers are “official”

No model can guess these reliably without you. Which brings us to the part most teams underestimate.

The customer is part of the product loop now

In the old SaaS world, the vendor built features, QA tested them, then threw them over the fence. You, as the customer, mostly validated integration and performance. With Solid, the customer is inside the learning loop. That is not a nice-to-have; it is the design.

A typical journey looks something like this:

- Ingestion and auto-learning – Solid connects to your stack, reads everything it can, and builds an initial semantic understanding.

- Expert pass from Solid’s team – Our own data and product specialists review what the AI learned. We correct obvious mistakes, tune prompts, and add domain patterns we have seen across customers. This takes the model from “raw” to “respectable”.

- Guided “stump the AI” sessions with your team – This is where it gets fun. Your analysts and business stakeholders start asking questions they truly care about. They try to break the system. When Solid gets something wrong, they mark it, correct it, and we treat that feedback as gold.

- Focused correction and pattern learning – Every correction is not just a patch. It is a pattern. If we learn that “active customer” has a very specific meaning in your world, we propagate that everywhere. This is where our approach in AI for AI: how to make “chat with your data” attainable within 2025 kicks in: using AI to fix data for AI.

- Rollout to broader users – When the core flows are stable, we expand to more use cases and more teams. Each new group brings new questions, which means more learning.

- Continuous refinement – Data changes, business changes, and incentives change. So the model keeps learning. But at this point, you are improving an already useful teammate, not babysitting a toddler.
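To make the "a correction is a pattern, not a patch" idea from the journey above concrete, here is a minimal sketch. It is not how Solid is implemented; the glossary, the metric templates, the SQL, and the function names are all hypothetical. It only illustrates the principle that if every metric refers to a business term by name, one correction to that term reaches every metric that uses it.

```python
# Hypothetical glossary: business term -> SQL predicate (illustrative only).
glossary = {"active_customer": "last_login_at >= CURRENT_DATE - 30"}

# Hypothetical metric templates that reference glossary terms by placeholder.
metric_templates = {
    "active_customer_count":
        "SELECT COUNT(*) FROM customers WHERE {active_customer}",
    "active_customer_revenue":
        "SELECT SUM(o.amount) FROM orders o "
        "JOIN customers c ON c.id = o.customer_id WHERE {active_customer}",
}


def render(metric_name):
    """Build the metric's SQL using the current glossary definitions."""
    return metric_templates[metric_name].format(**glossary)


def apply_correction(term, new_predicate):
    """Record a corrected definition and list the metrics it now affects."""
    glossary[term] = new_predicate
    placeholder = "{" + term + "}"
    return sorted(
        name for name, template in metric_templates.items()
        if placeholder in template
    )


# An analyst explains that "active" means "paid recently", not "logged in".
affected = apply_correction("active_customer", "paid_invoices_last_90d > 0")
for name in affected:
    print(name, "->", render(name))
```

The design choice worth noticing is that the definition lives in one place. The feedback from a single "stump the AI" session then changes every downstream answer instead of one query.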

The key shift is this: your organization is now a co-teacher of the AI. If you treat feedback as “extra work,” you will resent the process. If you treat it as “we are teaching a very fast learner who will keep that knowledge forever and share it with everyone,” the investment makes sense.

Redefining what “POC success” means

Most POCs still look like classic software projects: a few weeks of integration, a checklist of features, and a final demo. For learning software, that is not enough. You are not just checking “does it run.” You are checking:

- Coverage – Did the system actually learn the important part of your world, not just a toy example?

- Accuracy and trust – When it answers, is it reliable enough that people would act on it?

- Learning velocity – When something is wrong, how quickly can you teach it the right behavior so it sticks?
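One way to keep these three checks honest is to score them from a simple log of the questions your team asked during the POC. The sketch below is an assumption about how such a scorecard could look, not a Solid feature: the `EvalQuestion` fields, the topic names, and the formulas are all hypothetical. Coverage is the share of important topics with at least one answered question, accuracy is the share of answered questions a reviewer marked correct, and learning velocity is approximated by the average number of feedback rounds before a wrong answer stayed fixed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalQuestion:
    topic: str                      # business area the question belongs to
    answered: bool                  # did the system attempt an answer?
    correct: Optional[bool]         # reviewer verdict; None if unanswered
    rounds_to_fix: Optional[int]    # feedback rounds until a wrong answer stuck


def poc_scorecard(questions, important_topics):
    """Summarize coverage, accuracy, and learning velocity for a POC log."""
    answered = [q for q in questions if q.answered]

    covered = {q.topic for q in answered} & set(important_topics)
    coverage = len(covered) / len(important_topics) if important_topics else 0.0

    n_correct = sum(1 for q in answered if q.correct)
    accuracy = n_correct / len(answered) if answered else 0.0

    rounds = [q.rounds_to_fix for q in questions if q.rounds_to_fix is not None]
    avg_rounds = sum(rounds) / len(rounds) if rounds else None

    return {
        "coverage": coverage,
        "accuracy": accuracy,
        "avg_feedback_rounds_to_fix": avg_rounds,
    }


# Made-up example log, for illustration only.
log = [
    EvalQuestion("revenue", True, True, None),
    EvalQuestion("churn", True, False, 2),
    EvalQuestion("pipeline", False, None, None),
    EvalQuestion("revenue", True, True, None),
]
card = poc_scorecard(log, ["revenue", "churn", "pipeline", "support"])
print(card)
```

In this made-up log, two of the four important topics are covered and two of the three answered questions are correct. The thresholds that count as "good enough" are a judgment call for your team; the point is to track the trend across weeks, not a single snapshot.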
