
November 26, 2025

AI as an Operating System for High-Stakes Work: Lessons from Thomson Reuters’ CTO, Joel Hron

Yoni Leitersdorf

CEO & Co-Founder

Yoni, our CEO & Co-Founder, sat down with Joel Hron, CTO at Thomson Reuters, to talk about agents as an operating system, how to evaluate AI in high-stakes legal and tax workflows, and much more.

I recently sat down with Joel Hron, CTO at Thomson Reuters, for an episode of Building with AI: Promises and Heartbreaks (YouTube, Spotify, Apple Podcasts). Joel leads product engineering and AI R&D across legal, tax, audit, trade, compliance and risk. In other words, his teams are putting AI into some of the most high-stakes workflows in the world.

This post is my attempt to capture the parts of our conversation that stuck with me most: how a 100+ year old brand thinks like an AI company, what it means to ship agents into courtrooms and tax departments, and why Joel thinks evals are getting harder, not easier.

Thomson Reuters was “doing AI before AI was cool”

Like many people, Joel’s first reaction when Thomson Reuters approached his previous company ThoughtTrace was: “The news company? They do software too?”

As he dug in, he realized:

- Thomson Reuters is one of the largest software providers to professionals in law, tax, audit, compliance and risk.

- TR Labs has been working on AI and machine learning for over 30 years, mainly around search and information retrieval.

- They shipped a semantic search application more than twenty years ago, even before Google popularized the concept.

The core job of Thomson Reuters products is to deliver:

- Authoritative content – case law, statutes, tax codes, guidance

- Expert interpretation – how professionals should apply that information in the real world

For decades, that meant building very good natural-language search and retrieval. When large language models arrived, they didn’t start from zero. They already had:

- Deep experience in NLP and information retrieval

- Battle-tested search systems

- Massive proprietary knowledge bases

- Thousands of in-house experts (they are one of the biggest employers of lawyers in the world)

That was the foundation for their current wave of generative AI products.

Step One: From research lab to shipping GenAI products

Joel took over TR Labs right around the ChatGPT moment. Up to that point, Labs operated more like an applied research group, and the mainstream product teams had less experience applying AI to the products they shipped.

So they pivoted hard:

- Took ~120 people in Labs and moved them closer to product roadmaps.

- Doubled the team to 250+ over the next two years.

- Focused them on building and shipping concrete GenAI capabilities, not just prototypes.

Most early use cases were classic RAG (retrieval-augmented generation). As Joel put it:

“One of the core foundational components of good RAG systems is good search at the end of the day.”

Which is convenient if you’ve spent 30 years getting very good at search.
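To make the point about search concrete, here's a toy sketch of the retrieval step in a RAG pipeline: rank passages, then pack the top hits into the prompt as numbered sources. The corpus, the term-overlap scoring (a stand-in for a real search engine) and the prompt wording are all hypothetical, not a description of how TR's systems work.

```python
import re
from collections import Counter

# Hypothetical mini-corpus; in practice this would be a production search index.
DOCS = {
    "doc-1": "A termination clause lets either party end the contract with notice.",
    "doc-2": "The statute of limitations for breach of contract claims varies by state.",
    "doc-3": "Force majeure excuses performance when events are beyond a party's control.",
}

def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def search(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by term overlap with the query (a stand-in for real search)."""
    q = Counter(tokenize(query))
    scored = []
    for doc_id, text in DOCS.items():
        d = Counter(tokenize(text))
        score = sum(min(q[t], d[t]) for t in q)
        scored.append((score, doc_id))
    # Highest score first; ties broken by document ID for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(doc_id, DOCS[doc_id]) for score, doc_id in scored[:k] if score > 0]

def build_prompt(question: str, k: int = 2) -> str:
    """Pack retrieved passages as numbered sources the model is told to cite."""
    hits = search(question, k)
    sources = "\n".join(f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(hits, 1))
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )

print(build_prompt("How does a termination clause end a contract?"))
```

Whatever the retriever misses, the model never sees, which is why retrieval quality puts a ceiling on answer quality.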

Joel shared two key product areas:

- Legal research assistants

  - Use TR’s existing legal search engines as the retrieval layer.

  - Focus not on the “easy 70%” of questions you could already answer with Google, but on the nuanced, fringe questions that actually drive case strategy.

  - Optimize retrieval for how the language model needs to see context, not just for humans reading a result page.

- Contract drafting assistants

  - Take an existing clause (for example, a termination clause) and combine it with market standards and drafting notes.

  - Use generative models plus TR’s expert content to propose a better clause or a full redline.

A recurring theme was this idea of “fringe value”: if the model is only good enough for the middle 70% of questions, it’s not actually differentiating you in a professional setting. The hard problems live on the edges.

Step Two: Re-learning evaluation from scratch

When you move from simple search or classification models to LLM-powered systems, your evaluation playbook basically falls apart.

The old world:

- Precision and recall

- “Did we retrieve the right document in the top 3?”

The new world:

- Multi-page, subjective answers

- Chains of tool calls and decisions you can’t easily see

- Output that’s “sort of right” but subtly wrong in important ways
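For contrast, the old-world metrics fit in a few lines of code. Here's a minimal sketch of precision@k and recall@k with made-up document IDs; there is nothing this simple for scoring a multi-page answer.

```python
def precision_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k results that are relevant."""
    return sum(doc in relevant for doc in ranked[:k]) / k

def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(doc in relevant for doc in ranked[:k]) / len(relevant)

ranked = ["case-7", "case-2", "case-9", "case-4"]   # hypothetical search results
relevant = {"case-2", "case-4"}                     # hypothetical ground truth
print(precision_at_k(ranked, relevant, 3))  # 1 relevant in the top 3, so 1/3
print(recall_at_k(ranked, relevant, 3))     # 1 of 2 relevant found, so 0.5
```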

Joel’s team had to design new eval rubrics around ideas like:

- Completeness

- Coherence

- Helpfulness

- Harmfulness

- Factuality
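One way to make a rubric like this operational is to collect per-dimension scores from several graders and average them, so you can see where an answer is weak instead of getting a single number. This is a hypothetical sketch: the 1-5 scale, the grader names and the convention that every dimension is higher-is-better (so 5 on harmfulness means no harm found) are my assumptions.

```python
from dataclasses import dataclass
from statistics import mean

# The five dimensions come from the rubric above. The 1-5 scale and the
# "higher is better on every dimension" convention are assumptions.
DIMENSIONS = ("completeness", "coherence", "helpfulness", "harmfulness", "factuality")

@dataclass
class Grade:
    grader: str              # e.g. a trained subject-matter expert, or an LLM judge
    scores: dict[str, int]   # rubric dimension -> score on a 1-5 scale

def aggregate(grades: list[Grade]) -> dict[str, float]:
    """Average each rubric dimension across all graders of one answer."""
    return {dim: mean(g.scores[dim] for g in grades) for dim in DIMENSIONS}

def weakest_dimension(summary: dict[str, float]) -> str:
    """Name the dimension with the lowest average, i.e. where to focus next."""
    return min(summary, key=summary.get)

grades = [
    Grade("expert-a", {"completeness": 4, "coherence": 5, "helpfulness": 4, "harmfulness": 5, "factuality": 3}),
    Grade("expert-b", {"completeness": 2, "coherence": 5, "helpfulness": 4, "harmfulness": 5, "factuality": 5}),
]
summary = aggregate(grades)
print(summary)
print(weakest_dimension(summary))  # completeness
```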

They tried using LLMs as judges, like everyone else. It helped, but not enough to ship products that lawyers, courts and government agencies would rely on. So they leaned into their unfair advantage: thousands of domain experts.

- They trained editorial and subject-matter experts on how to score AI answers against these new rubrics.

- They built internal benchmarks with TR’s own data and questions.

- They use automated / LLM-based evals to speed up inner development loops, but final “go live” decisions still go through human evaluation.
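That split between fast automated loops and a human sign-off can be written down as a simple release gate. Here's a hypothetical sketch with made-up labels and thresholds, not TR's actual process: LLM-judge runs can block a release early, but only passing human-graded runs can approve it.

```python
from dataclasses import dataclass

@dataclass
class EvalRun:
    source: str               # "llm_judge" or "human" (hypothetical labels)
    scores: dict[str, float]  # rubric dimension -> mean score, 1-5 scale

def passes(run: EvalRun, thresholds: dict[str, float]) -> bool:
    """True if every thresholded dimension meets its minimum; missing scores fail."""
    return all(run.scores.get(dim, 0.0) >= minimum for dim, minimum in thresholds.items())

def ready_to_ship(runs: list[EvalRun], thresholds: dict[str, float]) -> bool:
    judge_runs = [r for r in runs if r.source == "llm_judge"]
    human_runs = [r for r in runs if r.source == "human"]
    # Cheap automated signal: any failing judge run blocks before expert time is spent.
    if not all(passes(r, thresholds) for r in judge_runs):
        return False
    # Go-live requires at least one human-graded run, and all of them must pass.
    return bool(human_runs) and all(passes(r, thresholds) for r in human_runs)

thresholds = {"factuality": 4.5, "harmfulness": 4.8, "completeness": 4.0}
judge = EvalRun("llm_judge", {"factuality": 4.7, "harmfulness": 5.0, "completeness": 4.2})
print(ready_to_ship([judge], thresholds))  # False: no human evaluation yet
```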

I really liked one principle Joel shared: if you can fully remove humans from the loop, you might not be working on a hard enough problem.

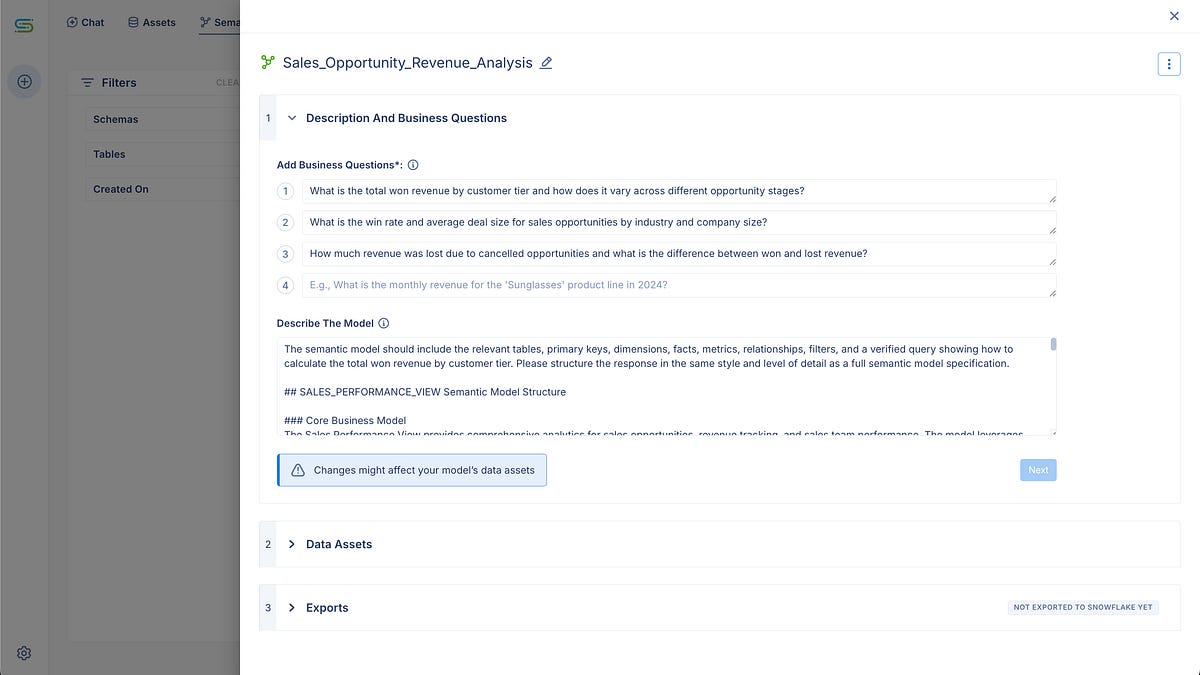

That resonated a lot with how we think about analysts at Solid. It also echoes a theme from my post “Stop saying ‘Garbage In, Garbage Out’, no one cares”, where I wrote about how messy reality always forces some human judgment back into the loop.

Setting expectations in high-stakes workflows

When your users are lawyers, judges, tax professionals and regulators, “move fast and break things” is not a helpful motto.

We talked about how Joel thinks about aligning expectations:

- Education during sales and onboarding about what the system can and cannot do.

- Clear in-product UX that signals limitations and nudges users towards validation.

- Very strong intent resolution and guardrails so the system only attempts to answer questions in domains where it can be safe and grounded.
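Intent resolution is essentially routing with a refusal path. Here's a deliberately simple sketch, assuming two supported domains and a keyword-overlap matcher where a real product would use a trained classifier; the domain names, trigger terms and messages are hypothetical.

```python
import re
from typing import Optional

# Hypothetical domains and trigger terms. A production system would use a trained
# intent classifier; keyword overlap just keeps the sketch self-contained.
SUPPORTED_DOMAINS = {
    "legal_research": {"case", "statute", "precedent", "court", "holding"},
    "tax": {"deduction", "depreciation", "irs", "vat", "filing"},
}

def resolve_intent(question: str, min_hits: int = 1) -> Optional[str]:
    """Return the best-matching supported domain, or None if nothing matches well enough."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best, best_hits = None, 0
    for domain, terms in SUPPORTED_DOMAINS.items():
        hits = len(words & terms)
        if hits > best_hits:
            best, best_hits = domain, hits
    return best if best_hits >= min_hits else None

def route(question: str) -> str:
    domain = resolve_intent(question)
    if domain is None:
        # Guardrail: decline instead of answering outside grounded domains.
        return "Out of scope: I can only help with legal research and tax questions."
    return f"Routing to the {domain} pipeline."

print(route("Which court precedent controls here?"))
print(route("What's the weather tomorrow?"))
```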

Interestingly, TR leaned into providing long, comprehensive answers early on. While many systems focused on short responses, their legal research assistant was happy to give you a multi-page analysis.

The point is not to replace the lawyer. It is to:

- Give them a strong starting point.

- Provide both sides of an argument where relevant.

- Deep-link every citation into Westlaw, their flagship research platform, so users can click through and run their own checks.

If there is no link for a cited case, it’s a strong signal something is off. The UX itself is part of the quality and trust story.
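The “no link means something is off” signal is easy to automate. Here's a minimal sketch, assuming the model marks citations as [[Case Name]] and that a lookup table maps citations to deep links; the markup, the index and the example.com URLs are all hypothetical stand-ins for a real citator.

```python
import re

# Hypothetical index mapping citation strings to deep links in the source system.
CITATION_INDEX = {
    "Smith v. Jones": "https://example.com/cases/smith-v-jones",
    "Doe v. Roe": "https://example.com/cases/doe-v-roe",
}

# Assumes the model wraps each citation in double square brackets, e.g. [[Smith v. Jones]].
CITE_PATTERN = re.compile(r"\[\[(.+?)\]\]")

def link_citations(answer: str) -> tuple[str, list[str]]:
    """Replace each citation with a deep link and collect citations that have no link."""
    unresolved = []

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        url = CITATION_INDEX.get(name)
        if url is None:
            unresolved.append(name)          # no link: flag it for the user
            return f"{name} [UNVERIFIED]"
        return f"{name} ({url})"

    return CITE_PATTERN.sub(substitute, answer), unresolved

linked, missing = link_citations("See [[Smith v. Jones]] and [[Acme v. Nobody]].")
print(linked)
print(missing)  # ['Acme v. Nobody']
```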

This is a nice counterpoint to the broader internet debate of “AI answers vs sending traffic”. In TR’s world, sending the user back into the source system is exactly the right thing to do.

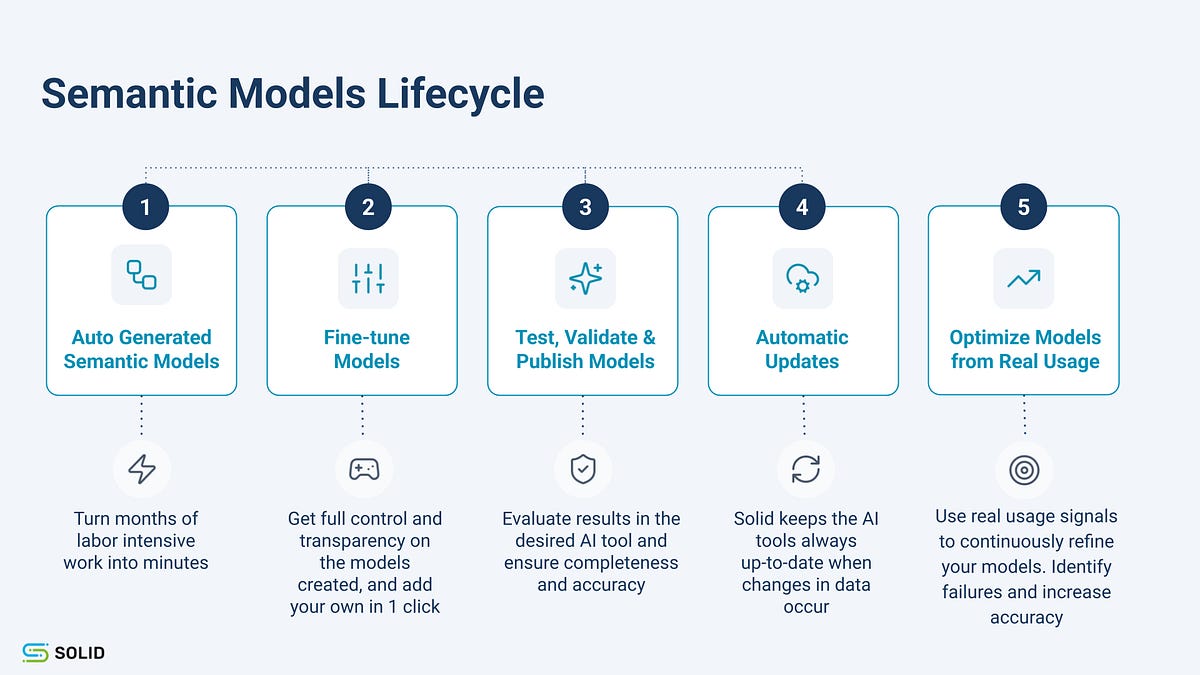

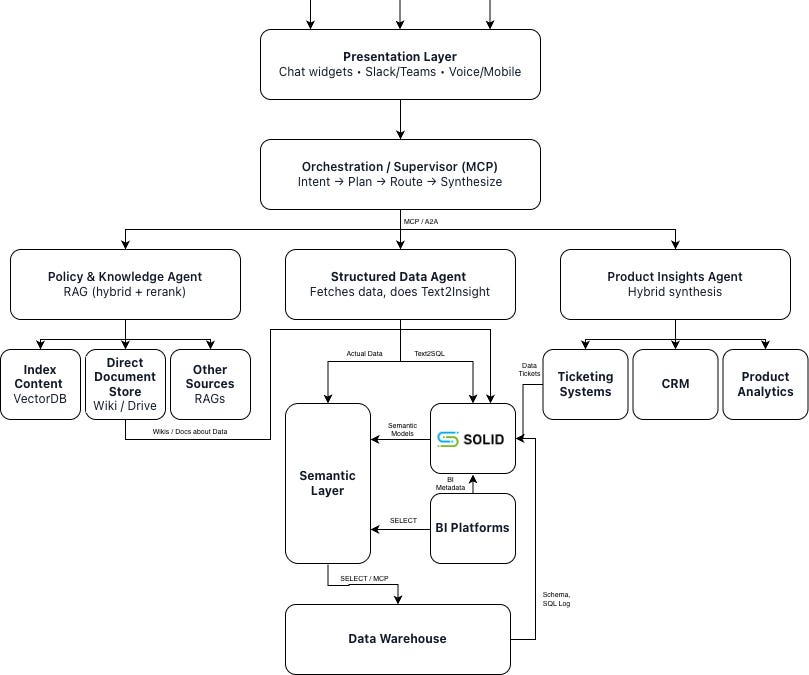

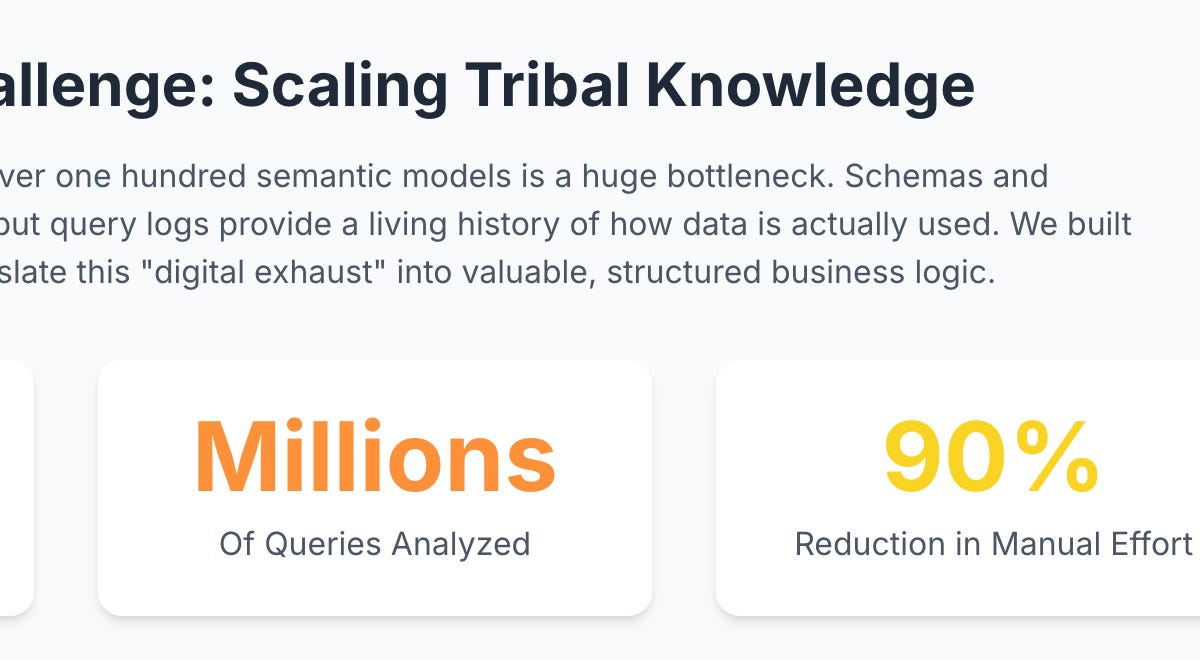

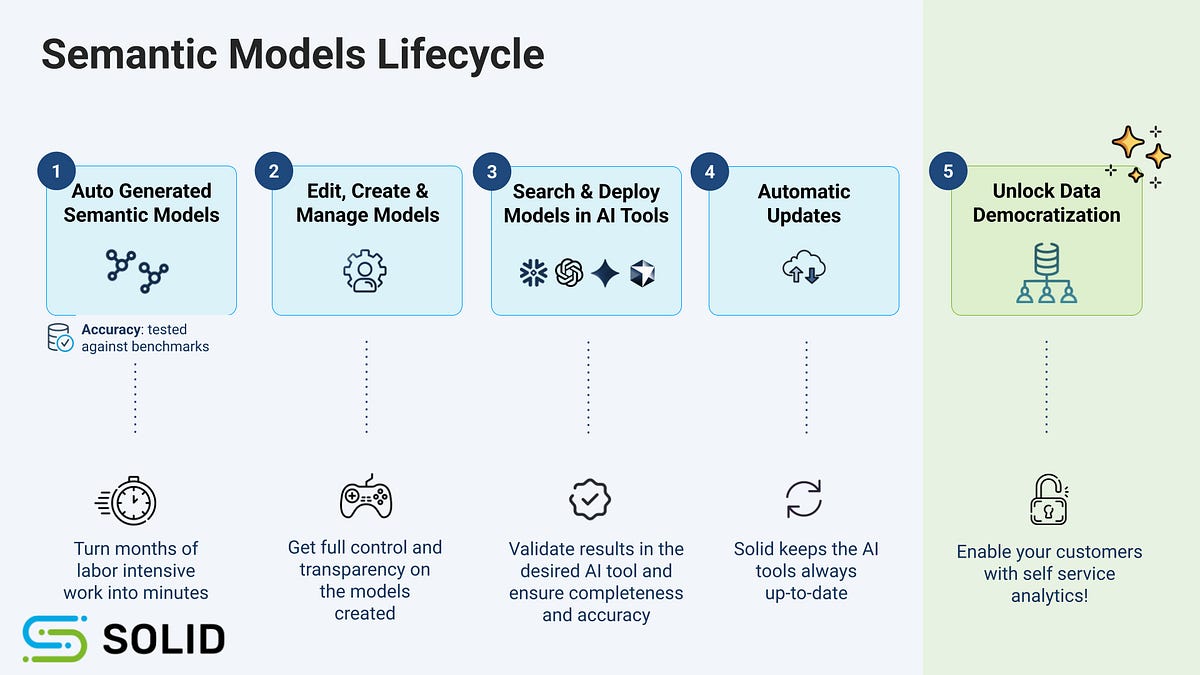

If you read “Behind the scenes: how we think about semantic model generation”, you’ll see a similar pattern: summaries and automation are powerful, but they always point back to the human-owned source of truth.

Agents: AI as an operating system

One of my favorite parts of the conversation was how Joel described agents:

“LLMs now seem like they are experts at operating a computer. Full stop.”

Instead of thinking about an all-knowing, general intelligence that contains everything, he sees the current trajectory as:

- Models that are very good at tool use

- Systems that can plan, call APIs, navigate file systems and applications

- An “AI operating system” that orchestrates a growing toolbox

For Thomson Reuters, that maps very nicely onto their product universe:

- 40+ tier-one products

- 100+ products overall

- Each with domain-specific features built over decades, like legal citators, tax calculators, audit prep checklists and so on

The strategy going into 2026:

- Take those existing capabilities and re-expose them as agent tools

- Not just “wrap an API and call it a day”, but actually design tools with the ergonomics an LLM needs: clear inputs, predictable outputs, good descriptions.

- Let agents use products like Westlaw the way a human would: follow citation trails, run secondary searches, check references, then synthesize a report.
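Here's what “the ergonomics an LLM needs” might look like in code: a tool with a model-facing description, declared and type-checked inputs, and one predictable result envelope, so a bad call returns a readable error instead of crashing. The Tool class and the check_citation example are hypothetical and not tied to any particular agent framework.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Tool:
    name: str
    description: str              # written for the model: when to use it and what it returns
    parameters: dict[str, type]   # every argument is required and type-checked
    fn: Callable[..., Any]

    def call(self, **kwargs: Any) -> dict[str, Any]:
        """Validate inputs and always return the same envelope: ok, error, data."""
        missing = [p for p in self.parameters if p not in kwargs]
        unknown = [k for k in kwargs if k not in self.parameters]
        wrong_type = [p for p, t in self.parameters.items()
                      if p in kwargs and not isinstance(kwargs[p], t)]
        if missing or unknown or wrong_type:
            error = f"missing={missing} unknown={unknown} wrong_type={wrong_type}"
            return {"ok": False, "error": error, "data": None}
        return {"ok": True, "error": None, "data": self.fn(**kwargs)}

def _lookup_status(citation: str) -> dict[str, str]:
    # Hypothetical stand-in for a real citator lookup.
    return {"citation": citation, "status": "unknown"}

check_citation = Tool(
    name="check_citation",
    description=("Check whether a cited case is still good law. "
                 "Input: one case citation as a string. Output: its citation status."),
    parameters={"citation": str},
    fn=_lookup_status,
)

print(check_citation.call(citation="Smith v. Jones"))
print(check_citation.call(cite="Smith v. Jones"))  # ok=False with a readable error
```

Returning the validation error as data, rather than raising, gives the model a chance to fix its own call on the next step.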

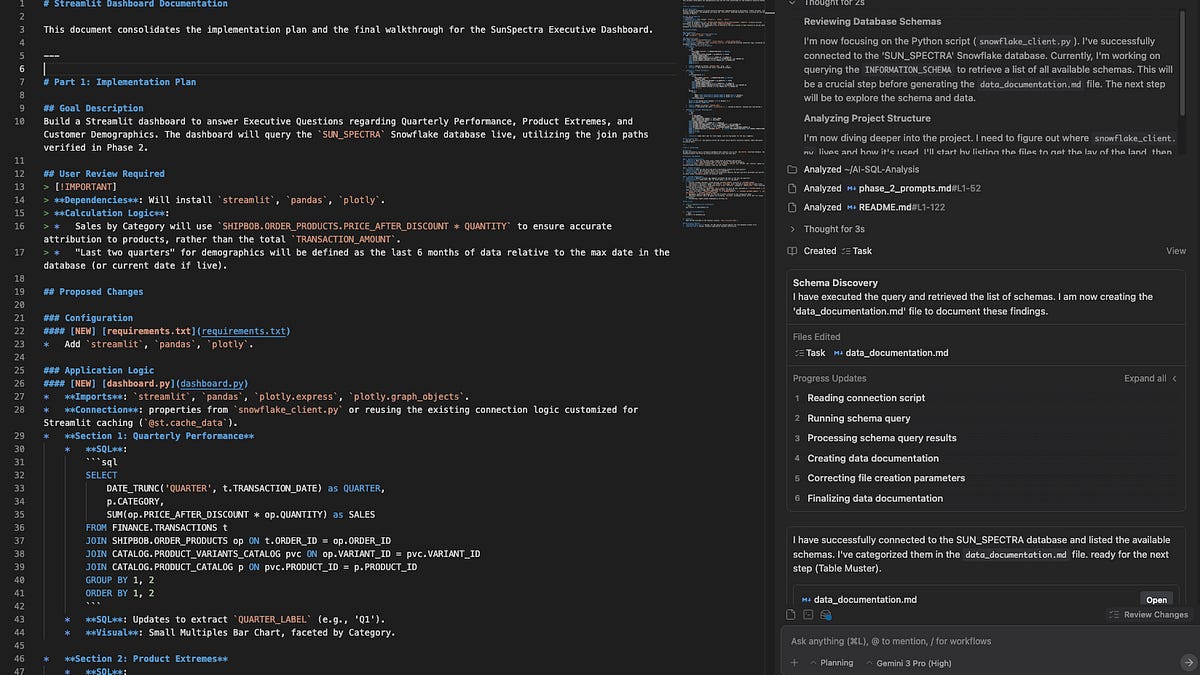

They already launched a “deep research” capability for Westlaw where:

- The agent uses legal research tools as building blocks.

- It can follow breadcrumb trails, validate its own citations and explore a space of arguments.

- Customers are telling them these reports look like work that would historically take “a hundred hours for a team of lawyers”, delivered in minutes.
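Following a citation trail is, at its core, a graph traversal. Here's a toy sketch, assuming a hypothetical citation graph and a breadth-first walk with a hop limit as the budget; a real research agent would let the model decide which branches are worth following.

```python
from collections import deque

# Hypothetical citation graph: each authority -> the authorities it cites.
CITES = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": [],
    "E": ["F"],
    "F": [],
}

def follow_trail(start: str, max_depth: int = 2) -> list[str]:
    """Breadth-first walk over citations, at most max_depth hops, each authority visited once."""
    seen = {start}
    order = []
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        order.append(node)          # a real agent would read and summarize this authority
        if depth == max_depth:
            continue                # budget exhausted along this branch
        for cited in CITES.get(node, []):
            if cited not in seen:
                seen.add(cited)
                queue.append((cited, depth + 1))
    return order

print(follow_trail("A"))  # ['A', 'B', 'C', 'D', 'E']
```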

Is it perfect? No. But it moves the AI assistant from “paralegal prototype” closer to “junior associate who never sleeps”.

If you listened to our earlier episode with Meenal Iyer and read “Building an AI-powered Intelligent Enterprise: How a Data Leader Steers Her Team Through the AI Journey”, you’ll recognize the same pattern: start with foundations, then let AI ride on top as a multiplier.

Why they stopped thinking in “MVPs”

There was a small point Joel made that I think many teams building with AI need to hear.

In the early days, they scoped their GenAI products the way you would scope a normal feature:

- Find a narrow, high-value use case.

- Build a minimal viable product.

- Expand from there.

That worked for a few months at a time, and then the ground shifted:

- Context windows exploded, so you could do contract-level reasoning where you previously only dared touch a clause.

- New models improved at reasoning and tool use, making whole architectures obsolete.

If you build for “what the model can do today” in a very narrow slice, there is a high chance you have to rebuild the whole thing in six months.

The adjustment they made:

- Aim wider from the start. Solve more of the end-to-end workflow.

- Then discover where the real hard parts are, and invest there.

- Assume the models will get better at many of the “easy” steps in the middle.

The hard problems ahead

So what keeps Joel up at night when he thinks about 2026?

1. Evals are getting harder

- Outputs are longer and more complex.

- Agent traces have many non-deterministic steps.

- It’s no longer obvious which component to tweak to improve end-to-end quality.

Debugging looks less like tuning a single model and more like debugging a distributed system with a slightly moody colleague in the middle.
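One small habit that helps: record every step of the agent trace and attach a cheap check to each tool, so you can find the first step that went wrong instead of staring at the final answer. This is a hypothetical sketch; the step format and the checks are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Step:
    tool: str     # which tool the agent called
    output: str   # what came back

def first_failure(trace: list[Step], checks: dict[str, Callable[[str], bool]]) -> Optional[int]:
    """Return the index of the first step whose tool-specific check fails, or None."""
    for i, step in enumerate(trace):
        check = checks.get(step.tool)
        if check is not None and not check(step.output):
            return i
    return None

# Hypothetical per-tool checks; tools without a check are skipped.
checks = {
    "search": lambda out: out.strip() != "",   # retrieval returned something
    "cite": lambda out: "http" in out,         # the citation carries a link
}
trace = [
    Step("search", "3 matching cases"),
    Step("cite", "Smith v. Jones (no link found)"),
    Step("summarize", "The court held that notice was required."),
]
print(first_failure(trace, checks))  # 1
```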

2. Latency and “test-time compute”

Users do not always want to wait 20 minutes for a perfect answer. Sometimes they just need to stay in flow:

- When is it worth spending more compute on deeper reasoning?

- When should you stop early and ask the user a clarification question?

Balancing that for each use case is still more art than science.
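To make the trade-off concrete, here is a hypothetical routing policy that chooses between asking a clarifying question, a quick answer, and a long deep-research run. The signals (ambiguity, stakes, wait tolerance) and every threshold are illustrative assumptions, which is exactly the part that is still more art than science.

```python
from dataclasses import dataclass

@dataclass
class Request:
    ambiguity: float   # 0-1, e.g. from an intent classifier (assumption)
    stakes: float      # 0-1, how costly a wrong answer would be (assumption)
    max_wait_s: float  # how long this user is willing to wait, in seconds

def choose_mode(req: Request, ask_threshold: float = 0.6) -> str:
    """Hypothetical policy; every threshold here is illustrative and would need tuning."""
    if req.ambiguity >= ask_threshold:
        return "ask_clarifying_question"   # cheaper than reasoning hard about the wrong question
    if req.stakes >= 0.7 and req.max_wait_s >= 600:
        return "deep_research"             # long, tool-heavy run
    if req.stakes >= 0.4:
        return "standard_reasoning"
    return "fast_answer"                   # keep the user in flow

print(choose_mode(Request(ambiguity=0.1, stakes=0.9, max_wait_s=1200)))  # deep_research
```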

3. UX for long-running, human-in-the-loop systems

If you’ve used tools like Claude Code or similar agents inside your IDE, you know this feeling:

- The best engineers use these tools as collaborators.

- They provide a plan, guide decisions, correct course and check edge cases.

- The worst experience is when the agent runs off on a tangent and you have no good way to steer it.

Joel expects the same thing in law, tax and audit:

- You need interfaces where professionals can see what the agent is doing.

- They should be able to intervene, redirect, approve or stop it.

- That UX is still very much an unsolved problem.
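A bare-bones version of that control loop: the agent proposes each step, and a human approves, skips, redirects or stops it before anything runs. The decision strings and function names are a hypothetical protocol; the one design choice worth copying is that anything unrecognized is treated as a stop.

```python
from typing import Callable

def run_with_oversight(steps: list[str], review: Callable[[str], str]) -> list[str]:
    """Ask a human reviewer about each proposed step before executing it.

    Hypothetical protocol: review() returns "approve", "skip", "stop",
    or "redirect:<replacement step>". Anything unrecognized is treated as "stop".
    """
    executed = []
    for step in steps:
        decision = review(step)
        if decision == "approve":
            executed.append(step)                           # a real agent would run the step here
        elif decision.startswith("redirect:"):
            executed.append(decision[len("redirect:"):])    # run the human's replacement instead
        elif decision == "skip":
            continue
        else:
            break                                           # "stop" or unrecognized: fail safe
    return executed

decisions = {
    "search cases": "approve",
    "draft memo": "redirect:draft memo with both sides",
    "file with court": "stop",
}
print(run_with_oversight(list(decisions), lambda step: decisions[step]))
```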

“This is AGI” – and why it’s not

At the end of the conversation, I asked Joel for an AI myth he’d bust in one sentence.

His answer:

“‘This is AGI.’ That’s the myth I’d bust.”

He doesn’t think we are at, or even particularly close to, AGI in the sense of a general, human-level intelligence. What we have instead are very capable systems for:

- Operating computers

- Reasoning over tools and APIs

- Extending themselves through the software and data we already have

Commercially, that might actually be more interesting. Especially if you are Thomson Reuters and your “toolbox” happens to include most of the world’s legal and tax workflows.

My takeaway

For me, this conversation with Joel crystallized a few things I see across many of our customers:

- The winners treat AI as an operating system for their existing strengths, not a replacement.

- High-stakes domains can and should move fast, but only with serious evals and human experts in the loop.

- Narrow MVP thinking breaks when the underlying AI stack changes every quarter. Designing around end-to-end workflows is a better bet.

If you’re building anything that sits between AI and critical decisions, I’d strongly recommend listening to the full episode with Joel (see the links at the top of this post). And if you want a contrasting view from the data-leader side, check out our recap of the episode with Meenal Iyer in “Building an AI-powered Intelligent Enterprise”.

In the meantime, I’ll keep asking guests the same closing question I asked Joel:

If AI in the next five years is over-hyped in the short term, but under-estimated in the long term, what does that mean for what you decide to build today?
