
January 21, 2026

From Black Box to Glass Box: the only sane way to run AI on your data

Yoni Leitersdorf

CEO & Co-Founder

Most “chat with your data” and agent+data tools are Black Boxes: they hide how they use your warehouse, how they analyze results, and why they take certain actions. At Solid, we believe that's bonkers.

Everyone wants AI on their data. Almost no one trusts it.

Two waves are hitting companies at once:

- “Chat with your data” on top of the warehouse or BI layer

- “Agents with your data” that don’t just answer questions but also update the CRM, tweak campaigns, and move money

From the outside: magical.

From the inside, if you own the data: risky.

You don’t really know:

- Which tables and columns the model is using

- Why it picked this query instead of another

- How it analyzed the results before answering or taking action

And you know you’ll be held responsible if it’s wrong.

That’s what happens when the AI+data layer is a Black Box.

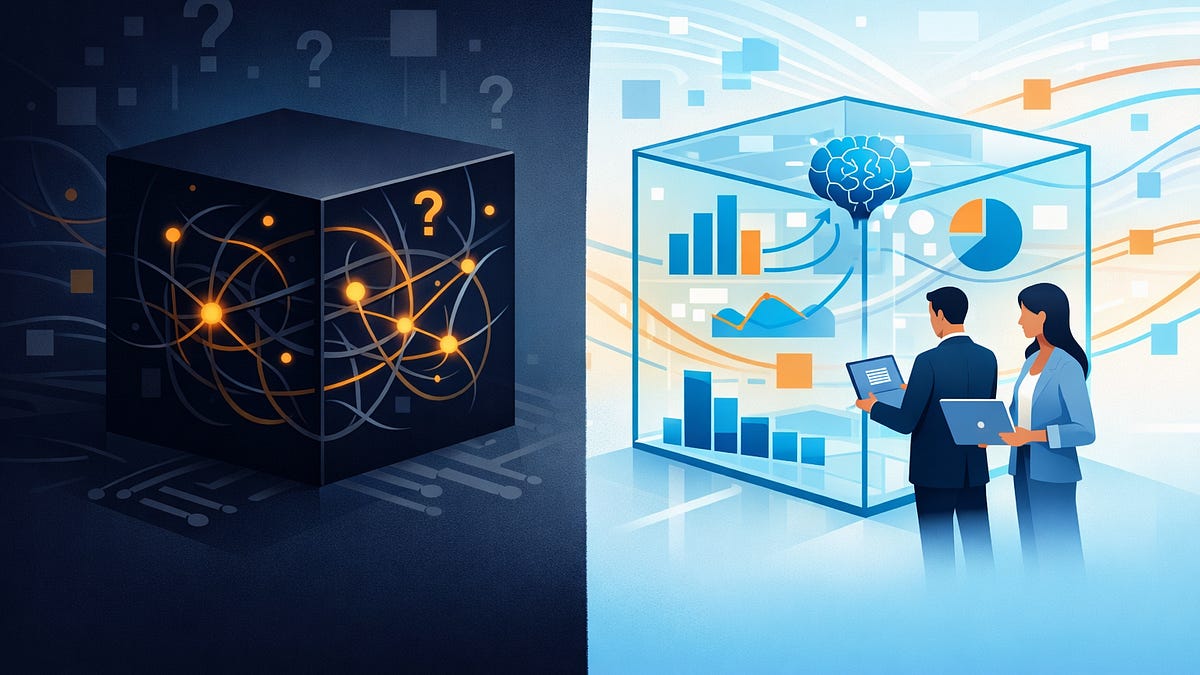

Black Box vs Glass Box, for chat and agents

This isn’t just about “explainable models.” It’s about whether the system that combines models with your warehouse is understandable and controllable.

What Black Box looks like

Typical pattern:

- Connect a tool to your warehouse

- Point it at your schema

- Start asking questions or giving tasks to agents

You might see the generated SQL or API calls. But you don’t really control or observe:

- What’s fair game: which schemas, tables, columns and metrics it considers “ok to use.”

- Why it chose that query: why it joined on that key, used that grain, or picked that time window.

- How it analyzed results: which segments it compared, what thresholds it used, how it turned rows into “insights.”

- What it’s allowed to say or do: when it must refuse, when it should escalate, and which actions are off limits.

The “reasoning and policy layer” is invisible. If that’s opaque, you have a Black Box, even if you can see the SQL.

What Glass Box should look like

A Glass Box starts from a simple premise:

The people who own the data should also own the rules for how AI can use it.

Concretely:

- Control over inputs: data teams decide which schemas, tables, columns, entities and metrics exist in the AI’s universe.

- Control over reasoning: clear mappings from business concepts to joins and metrics, and editable rules for how common questions are interpreted.

- Control over policy: guardrails for what the AI can answer or do, when to say “I don’t know,” and when to route to a human.

- Visibility into traces: for every answer or agent run, you can inspect the data accessed, rules applied, and path taken.

That’s the minimum bar if you want AI touching real customers and real dollars.
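To make those controls concrete, here is a minimal Python sketch, assuming a simple in-memory setup. `GlassBoxPolicy`, `Trace`, `check_columns` and `check_action` are hypothetical names invented for illustration, not Solid’s implementation or any product’s API. An allowlist defines which columns exist in the AI’s universe, a policy decides whether an action is allowed, refused, or escalated to a human, and every check lands in a trace whatever its outcome.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sketch: names and structure are illustrative assumptions,
# not Solid's implementation or any real product's API.


@dataclass
class GlassBoxPolicy:
    # Control over inputs: only these tables and columns exist for the AI.
    allowed_columns: dict[str, set[str]]
    # Control over policy: actions that are never allowed...
    forbidden_actions: set[str] = field(default_factory=set)
    # ...and actions that must be routed to a human first.
    approval_required: set[str] = field(default_factory=set)


@dataclass
class Trace:
    # Visibility into traces: every check is recorded, whatever its outcome.
    events: list[tuple[str, str, str]] = field(default_factory=list)

    def log(self, kind: str, subject: str, outcome: str) -> None:
        self.events.append((kind, subject, outcome))


def check_columns(policy: GlassBoxPolicy, trace: Trace, table: str, columns: list[str]) -> bool:
    """Allow a read only if every requested column is on the allowlist."""
    allowed = policy.allowed_columns.get(table, set())
    blocked = set(columns) - allowed
    trace.log("read", f"{table}({', '.join(sorted(columns))})", "denied" if blocked else "allowed")
    return not blocked


def check_action(policy: GlassBoxPolicy, trace: Trace, action: str) -> str:
    """Refuse forbidden actions, escalate sensitive ones, allow the rest."""
    if action in policy.forbidden_actions:
        outcome = "refused"
    elif action in policy.approval_required:
        outcome = "escalated_to_human"
    else:
        outcome = "allowed"
    trace.log("action", action, outcome)
    return outcome


policy = GlassBoxPolicy(
    allowed_columns={"accounts": {"id", "region", "arr"}},
    forbidden_actions={"issue_refund"},
    approval_required={"update_crm_stage"},
)
trace = Trace()
check_columns(policy, trace, "accounts", ["id", "arr"])  # allowed
check_columns(policy, trace, "accounts", ["id", "ssn"])  # denied: ssn is out of scope
check_action(policy, trace, "update_crm_stage")          # escalated to a human
check_action(policy, trace, "issue_refund")              # refused outright
for event in trace.events:
    print(event)
```

One design choice worth noting: denials and escalations are traced too. A trace that only records successful calls can’t answer “why did the agent refuse?”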

Why Black Box breaks in production

Black Box might be fine for summarizing PDFs. Structured data and agents are different.

- Silent misunderstandings: the model picks a “close enough” table or metric. The number looks plausible, until it’s in a board deck or powering an agent that changes a deal.

- Opaque analysis: you see the SQL, but not why certain segments, thresholds, or outliers were chosen. The important logic lives above the query layer.

- Governance risk: tools blur the line between what’s technically accessible and what’s allowed. Sensitive combos of fields, or actions based on context that shouldn’t have been used, slip through.

- Loss of trust: if leadership asks “Why did we get this answer?” or “Why did the agent do that?” and the real answer is “We’re not sure,” you won’t get production sign-off.

Black Box AI demos well. It dies in governance review.

Glass Box first. Context graph later.

There’s a lot of hype around “context graphs” and “systems of agents.” Underneath the jargon, there’s a simple idea:

- It’s not enough to know what the current state of the business is.

- You also want to understand how and why key decisions were made over time.

If you do Glass Box right, you get the ingredients for this “context graph” almost for free:

- Every answer and agent run comes with a trace:

- Which entities and metrics were used

- Which rules and guardrails fired

- Where humans stepped in

- Over time, those traces connect your core business objects (accounts, tickets, policies, approvals) with the decisions that changed them.
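A minimal sketch of how stored traces could add up to a decision history, assuming each trace records the business objects it touched. `DecisionTrace`, `build_decision_index` and `why` are hypothetical names, and the run IDs, rule name and approver are made-up illustration data. Indexing traces by object is about the simplest possible version of linking accounts, tickets, policies and approvals to the decisions that changed them; a fuller graph would likely add more relationship types.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# Hypothetical sketch: field names and sample data are illustrative assumptions.


@dataclass(frozen=True)
class DecisionTrace:
    run_id: str
    kind: str                          # "answer" or "agent_run"
    objects: tuple[str, ...]           # business objects read or changed, e.g. "account:42"
    metrics: tuple[str, ...] = ()      # entities and metrics used
    rules_fired: tuple[str, ...] = ()  # rules and guardrails that fired
    approved_by: str | None = None     # set when a human stepped in


def build_decision_index(traces: list[DecisionTrace]) -> dict[str, list[DecisionTrace]]:
    """Link each business object to the traces that touched it, in arrival order."""
    index: dict[str, list[DecisionTrace]] = defaultdict(list)
    for trace in traces:
        for obj in trace.objects:
            index[obj].append(trace)
    return index


def why(index: dict[str, list[DecisionTrace]], obj: str) -> list[tuple]:
    """Summarize the decision history behind one object: run, rules fired, approver."""
    return [(t.run_id, t.rules_fired, t.approved_by) for t in index.get(obj, [])]


traces = [
    DecisionTrace("run-1", "answer", ("account:42",), metrics=("arr",)),
    DecisionTrace("run-2", "agent_run", ("account:42", "ticket:7"),
                  rules_fired=("discount_cap",), approved_by="maria"),
]
index = build_decision_index(traces)
print(why(index, "account:42"))
# [('run-1', (), None), ('run-2', ('discount_cap',), 'maria')]
```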

You don’t have to sell this internally as “let’s build a context graph.”

You sell it as:

- “Let’s make our AI legible and auditable now”

- …and get a rich history of decisions that future agents and analytics can learn from later

Glass Box is the thing you must do.

The “context graph” is what naturally emerges if you instrument it well.

The catch: Glass Box sounds slow and manual

Most leaders nod along with all of this – then immediately worry about cost and speed.

Hand-crafting every metric, join, rule and guardrail for AI sounds like:

- Rebuilding your semantic layer from scratch

- A multi-quarter project that never leaves the pilot stage

This is why Black Box tools are attractive: connect your warehouse, get a demo next week.

It feels like a tradeoff:

- Black Box: fast but risky

- Glass Box: safe but painfully slow

We think that’s a false choice.

You should be able to have your Glass Box and move fast.

The AI should help you build the Glass Box.

Solid’s theory: accelerate the Glass Box, don’t avoid it

At Solid, our bet is simple:

The only sane way to run AI on your data is as a Glass Box – and the only way to make that practical is to automate most of the Glass Box creation while keeping data teams in control.

In practice, that means:

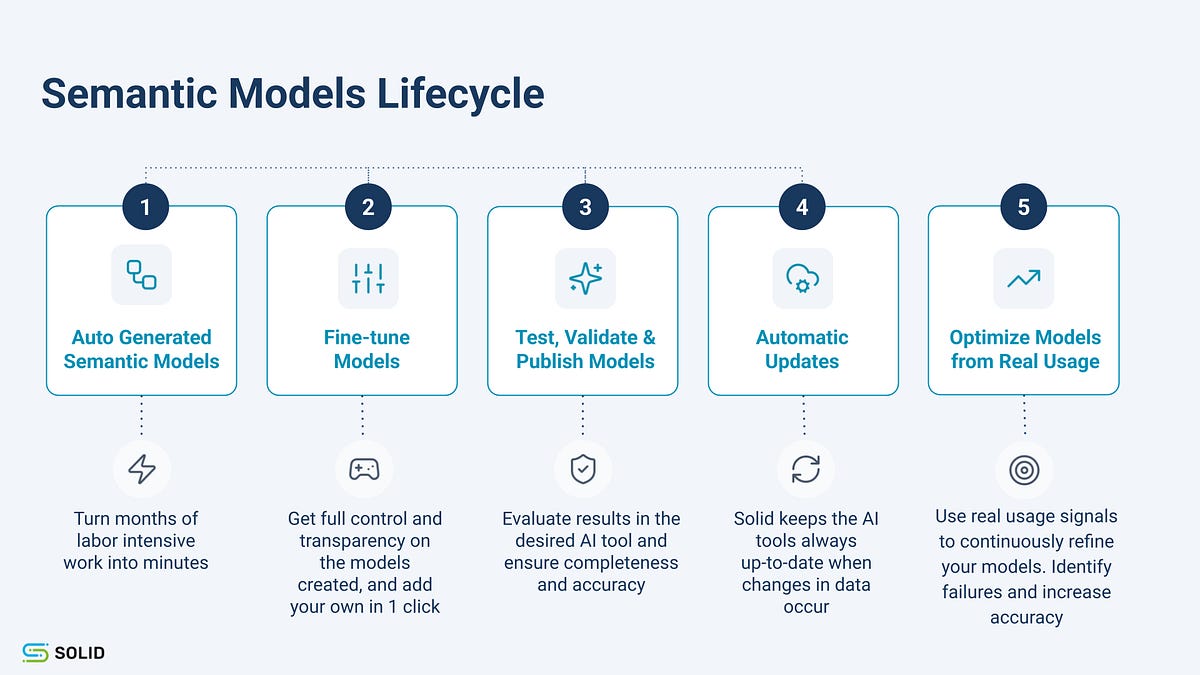

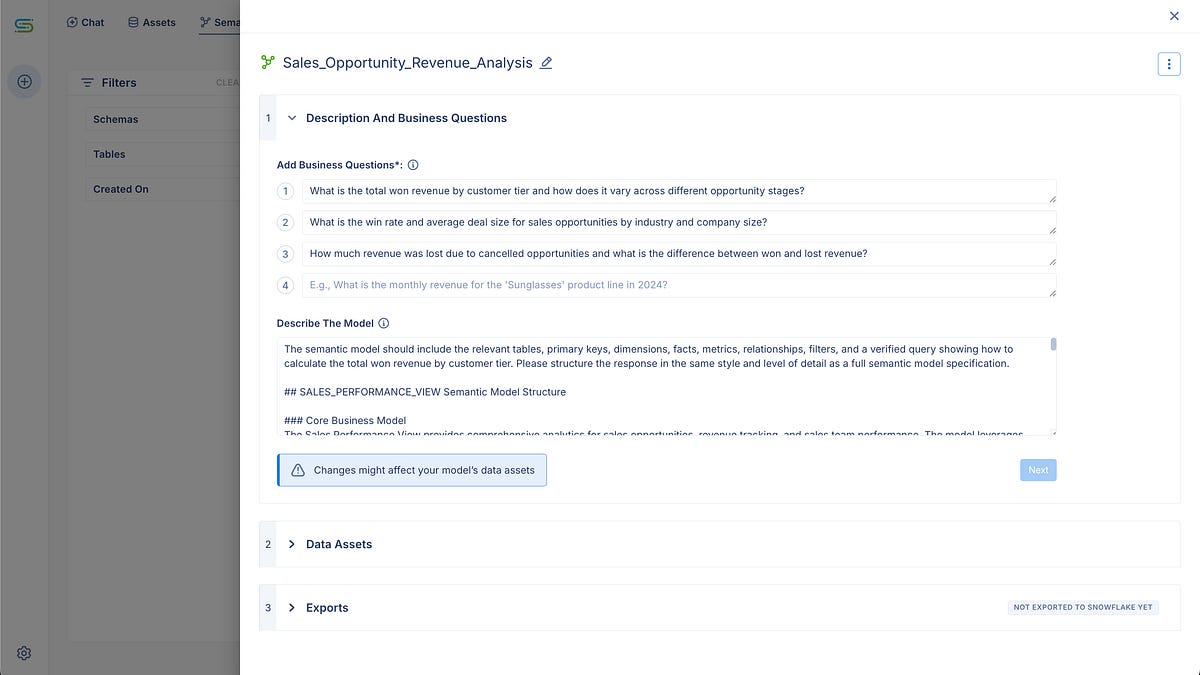

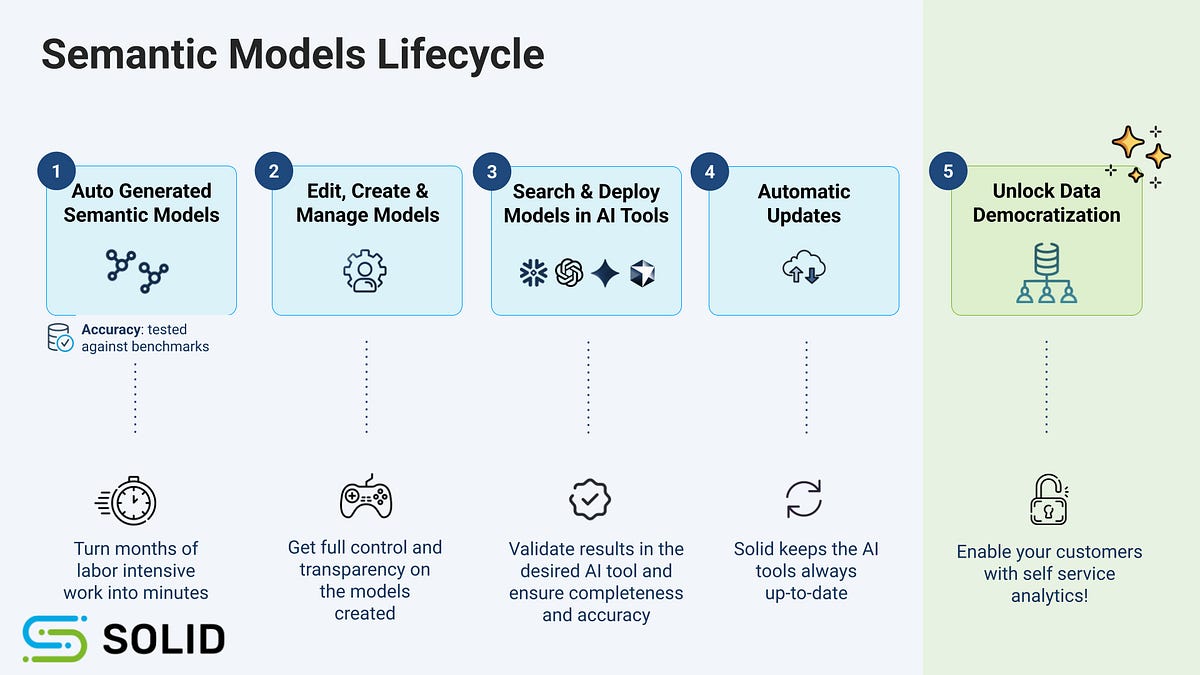

1. Autogenerate, then curate

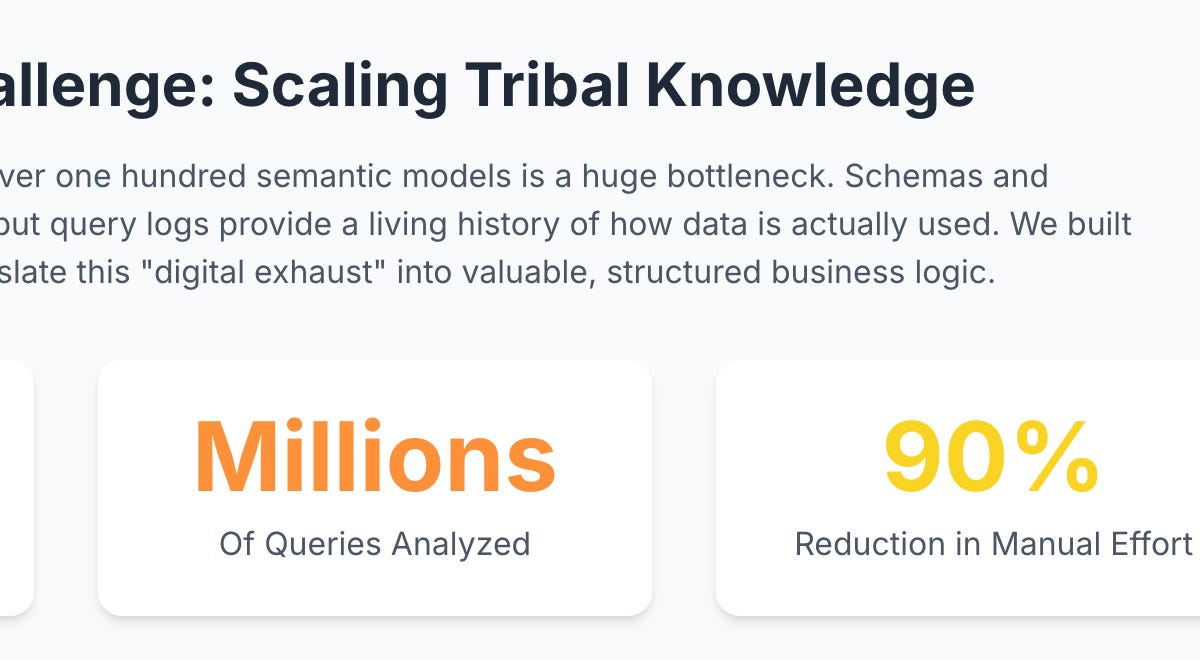

Your warehouse already contains a lot of structure: schemas, models, usage patterns, documentation.

Instead of asking data teams to author everything by hand, we use AI to:

- Infer likely entities, metrics and relationships

- Propose initial rules and guardrails around them

Data teams review and correct, instead of starting from zero. Control stays with humans; effort drops dramatically.
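Here is a minimal sketch of that review loop, assuming the AI inference step has already produced draft definitions (that step is out of scope and stubbed with hand-written examples). `Proposal`, `review` and `governed_layer` are hypothetical names, and the metric definitions are illustrative placeholders. The property it shows is the one described above: nothing the AI infers reaches chat or agents until a human has accepted or corrected it.

```python
from __future__ import annotations

from dataclasses import dataclass, replace

# Hypothetical sketch: the AI inference step is stubbed out with hand-written drafts.


@dataclass(frozen=True)
class Proposal:
    name: str
    definition: str  # illustrative SQL-style expression
    source: str      # where the draft was inferred from
    status: str = "proposed"


def review(proposal: Proposal, decision: str, new_definition: str | None = None) -> Proposal:
    """A data-team reviewer accepts, edits, or rejects an AI-generated draft."""
    if decision == "accept":
        return replace(proposal, status="accepted")
    if decision == "edit":
        if new_definition is None:
            raise ValueError("an edit needs a corrected definition")
        return replace(proposal, definition=new_definition, status="accepted")
    if decision == "reject":
        return replace(proposal, status="rejected")
    raise ValueError(f"unknown decision: {decision!r}")


def governed_layer(proposals: list[Proposal]) -> dict[str, str]:
    """Only human-accepted definitions become visible to chat and agents."""
    return {p.name: p.definition for p in proposals if p.status == "accepted"}


drafts = [
    Proposal("arr", "SUM(subscriptions.annual_amount)", "schema"),
    Proposal("active_customer", "last_login_at >= CURRENT_DATE - 30", "usage patterns"),
    Proposal("ltv", "AVG(orders.total)", "documentation"),
]
reviewed = [
    review(drafts[0], "accept"),
    review(drafts[1], "edit", "last_order_at >= CURRENT_DATE - 90"),  # reviewer corrects the definition
    review(drafts[2], "reject"),                                       # average order value is not LTV
]
layer = governed_layer(reviewed)
print(layer)
```

Because proposals are immutable and `review` returns a new copy, the original AI draft stays available next to the human decision, which is itself a small trace.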

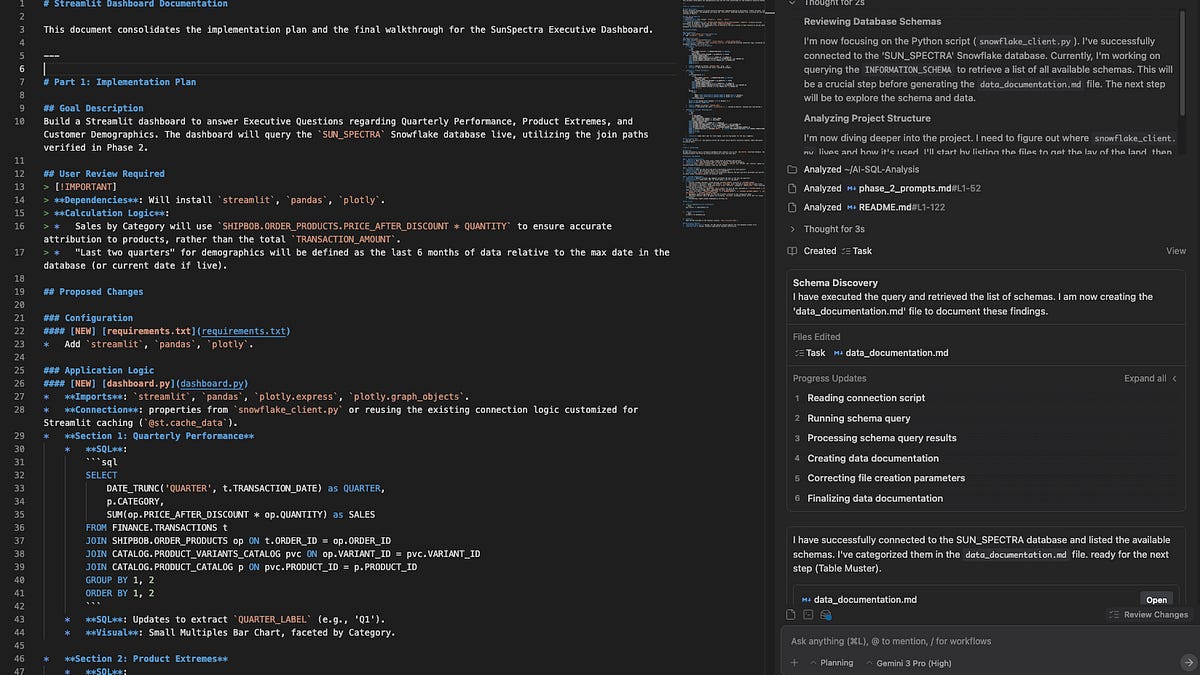

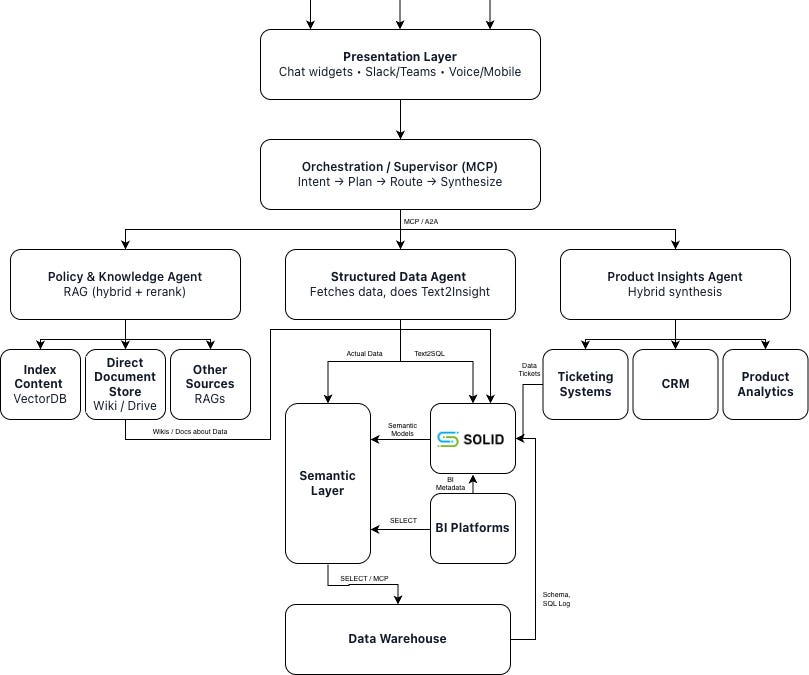

2. One Glass Box for chat and agents

No separate universes for:

- “Chat with your data”

- Agentic workflows that take action

Both run on the same governed layer of entities, metrics, rules and policies. Fix a misinterpretation once, and everything gets safer at the same time.

3. Decision traces as a built-in byproduct

Every AI answer and agent run produces a trace:

- Data accessed

- Rules invoked

- Guardrails triggered

- Human approvals

That’s your path to a useful “context graph” over time – without a massive upfront modeling project or a new buzzword program to sell internally.

A quick “Glass Box or not?” checklist

When you evaluate “AI on your data” tools, ask:

- Can my data team choose and update what schemas, tables and columns exist in the AI’s world?

- Can we define business concepts and metrics once and have those govern all AI behavior?

- Can we encode what the AI is not allowed to answer or do as policy, not just prompts?

- For any answer or agent run, can we see the full trace of data, rules and decisions?

- Does the system help us build and maintain this Glass Box automatically, or does it rely on manual configuration?

If the answer to any of these is no, you’re not getting a Glass Box.

You’re getting a slightly translucent Black Box on top of your most important asset.

Where this is going

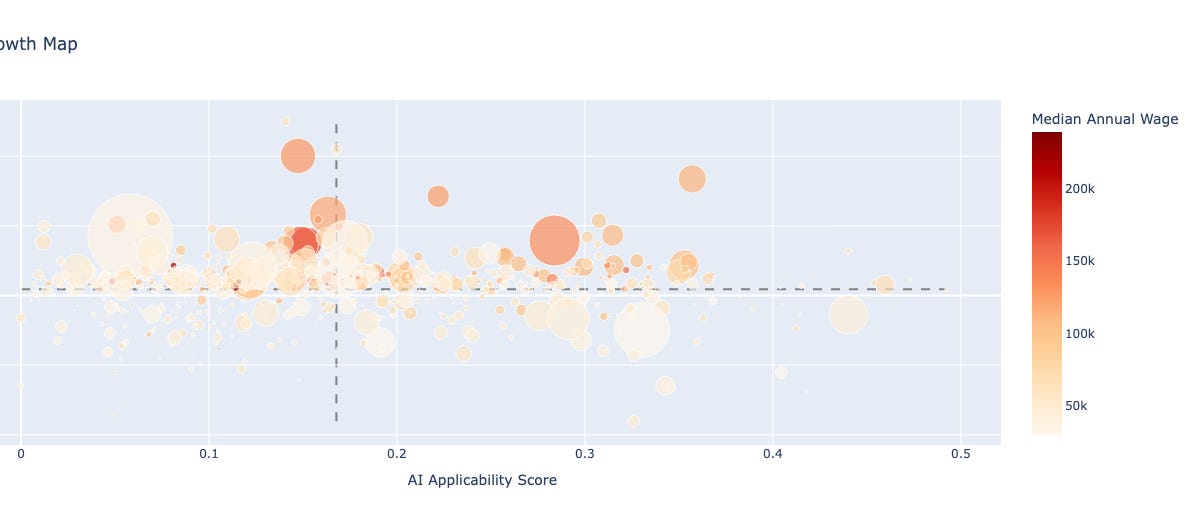

Systems of record aren’t going away. Systems of agents are arriving fast.

The real leverage is in the layer that connects the two in a way that’s understandable and controllable.

For AI on structured data, that means:

- Glass Box by design

- Decision traces by default

- Acceleration from AI, not avoidance of governance

That’s the path we’re building toward at Solid.
