Blog

December 19, 2025

From Spaghetti to Digital Companions: How HiBob’s Reut Einav Rebuilt Data for the AI Era

Yoni Leitersdorf

CEO & Co-Founder

Yoni, our CEO, sat down with HiBob’s VP of Data, Reut Einav, to talk about migrating an entire data foundation in six months, empowering 2,000 people to build GPTs, and why “No AI can fix bad data."

I recently recorded a Building with AI: Promises and Heartbreaks episode with Reut Einav, VP of Data at HiBob. You can listen to it on YouTube here

HiBob (or “Bob”) is a fast-growing HR and payroll platform that now also stretches into finance, helping mid-sized companies manage everything from HRIS and engagement to native payroll and FP&A in one system.

Reut joined in December 2024 as VP of Data, after senior data roles at Amdocs and Yotpo.

Her mandate, in her own words:

“I brought in to fix the data, which is a very big statement.”

What followed was a six-month sprint where her team rebuilt HiBob’s entire analytical foundation, kept the business running, and used AI agents as part of the migration itself. In parallel, HiBob’s AI Mind program helped 2,000 employees create thousands of GPTs that are now turning into production “digital companions.”

This post is my attempt to distill that story and connect it to what we talk about a lot here at Solid: semantic layers, “chat with your data,” and getting real AI into production, not just decks.

Step One: “Fix the data” starts with mapping the spaghetti

When Reut arrived, HiBob was ~2,000 people and ten years into its journey. The company had grown quickly, expanded from core HR into payroll and now into the CFO’s wallet with finance capabilities.

Like many scale-ups, they already had functioning data:

- multiple databases

- several BI tools

- local spreadsheets and models owned by teams

- years of organic dashboards and logic

Her first month was not about technology choices. It was about archaeology.

She and her team:

- mapped existing assets and lineage

- grouped them into business areas and processes

- reverse-engineered how the business actually made decisions

As she put it, everything was “scattered all over the round between databases, between BI tools, between local assets that people held,” and the first big task was finding patterns in “this pile of, again, amazing assets.”

Very quickly, it became clear that a light refactor would not cut it. To prepare for AI and the next decade, they would need to rebuild the whole analytical infrastructure, while keeping every existing report and process alive.

In other words: re-lay the foundation while everyone is still living in the building.

Step Two: Rebuild while the lights stay on

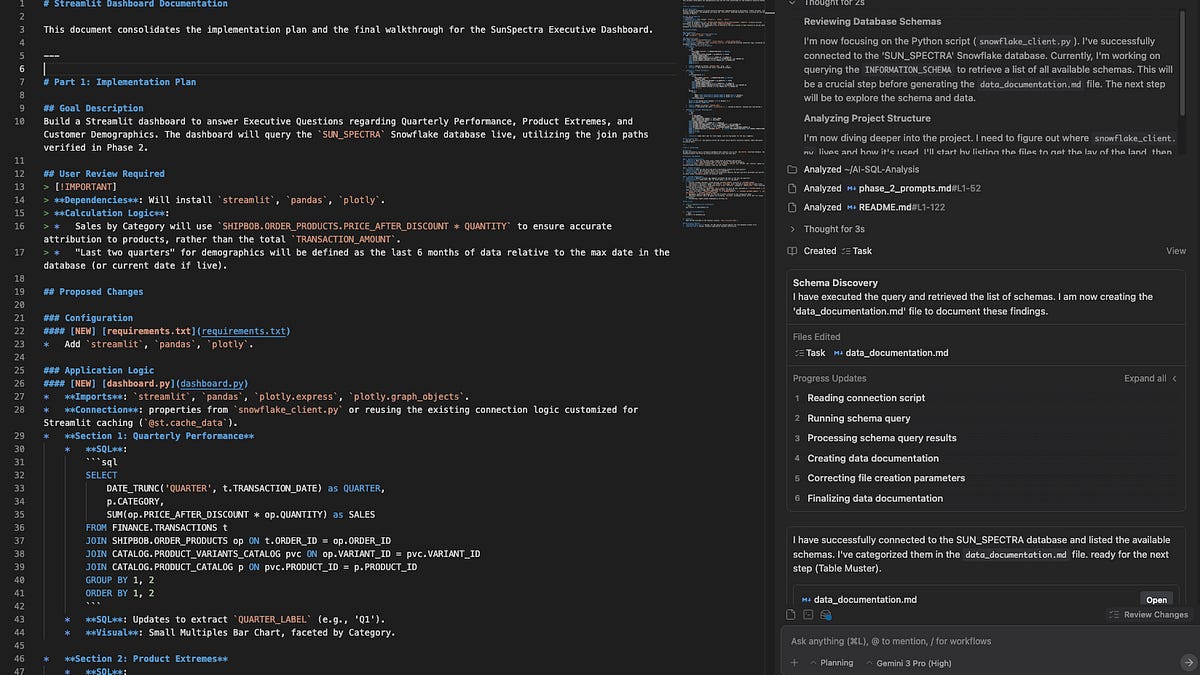

Technically, the trigger for the brutal timeline was a BI migration: they had to move from a server-based deployment to the cloud, which gave them a hard cutoff date.

But the deeper driver was value. As Reut said, if the migration dragged on:

- business users would see “no impact and value”

- the data org would burn political capital and budget

- she might not even get to a second year in the role

So they chose a four-to-six-month window. That is aggressive for any full-stack data migration, let alone at HiBob’s scale.

To make it real, they:

- Doubled down the team.

HiBob invested in contractors and rebuilt the internal data team around the new mission. - Used AI to understand the mess.

They leaned on AI to parse schemas, code, and dashboards to get a “very clear understanding of all of this spaghetti” and map it into business-aligned domains. - Ran two environments in parallel.

The existing stack kept serving the business. In parallel, they built a new environment with Snowflake, dbt, Fivetran and friends, then carefully migrated use cases, and finally shut off the old world.

If you’ve read our post on “Semantic layer for AI: let’s not make the same mistakes we did with data catalogs,” you’ll recognize this pattern: strong modeling, clear semantics, and ruthless scope discipline instead of trying to boil the ocean.

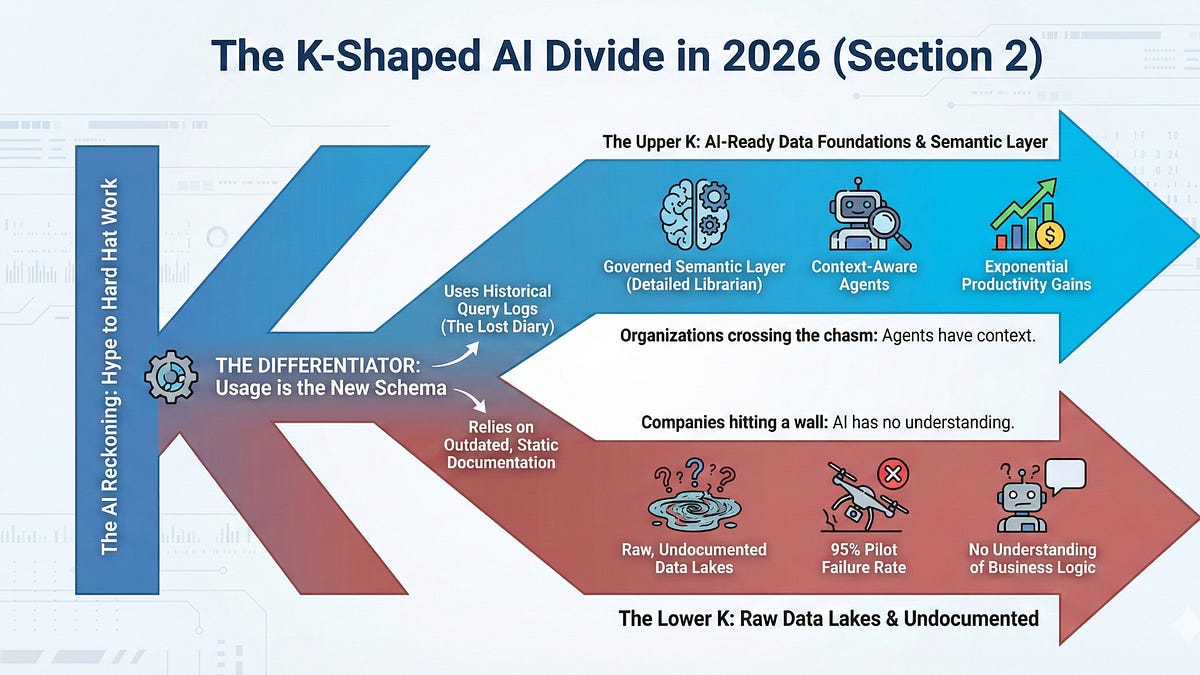

Step Three: Back to basics, for AI

One thing I loved about this conversation is how “AI ready” for Reut does not start with models. It starts with fundamentals.

She described it like this:

- Before BI and self-service, many of us lived in cube land. We had very explicit schema, relationships, and controlled reporting.

- The last decade shifted to democratized BI, where logic moved into reports, LookML, dashboards, team-owned assets.

- That created freedom, but also scattered semantics.

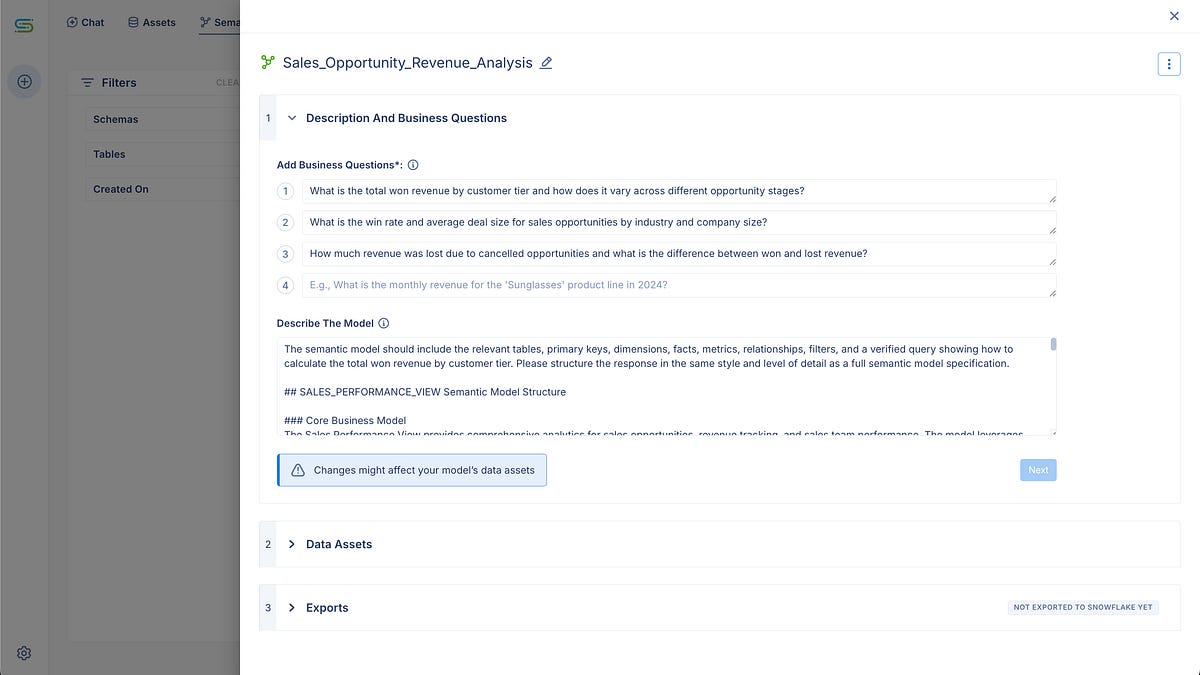

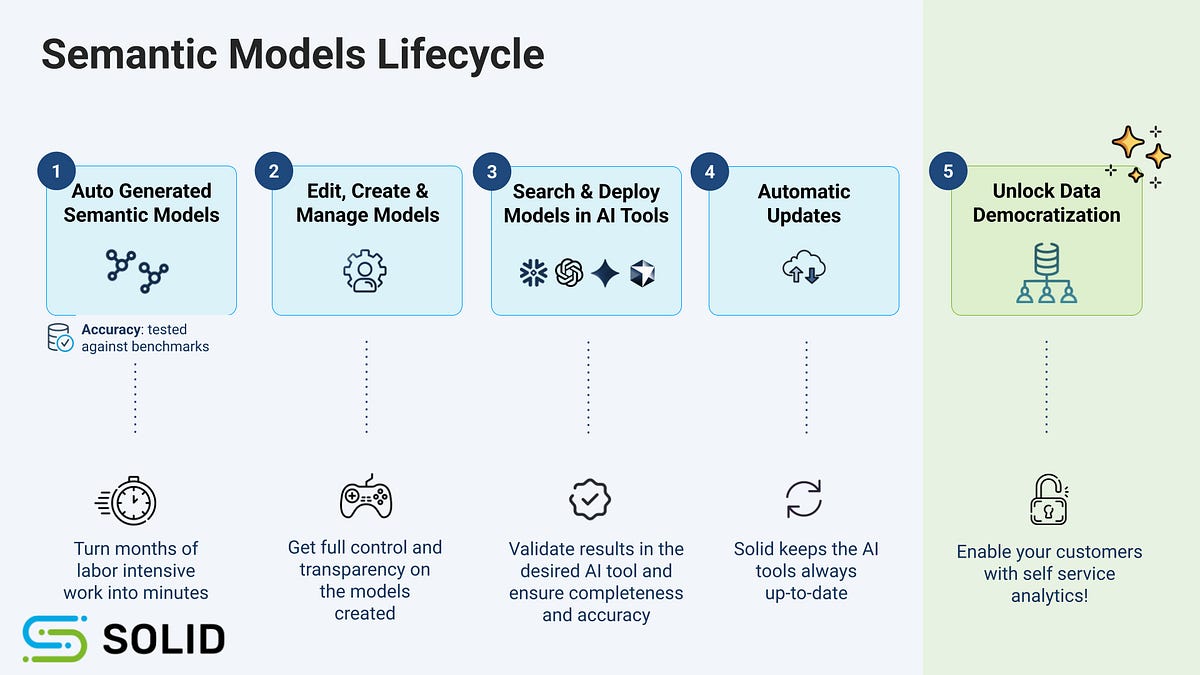

So she “went back to the basics,” because for AI you need one semantic brain:

- one place where business concepts live

- one source of truth that both agents and dashboards can rely on

- one layer that powers “reverse ETL, ‘chat with your data’, dashboards, services” all reading from the same definitions

This is very aligned with what we’ve been writing here:

- In The Two Souls of a Semantic Layer, we talked about the tension between governance and speed.

- In Stop saying “Garbage In, Garbage Out”, no one cares, we argued that slogans about data quality are not enough; you need opinionated, production-grade models.

HiBob made that investment, at high speed.

Step Four: Give the business a playground, not a black box

Once the new foundation was in place, they flipped the experience for business users.

From the outside, nothing “broke.” But the capabilities changed dramatically:

- A robust analytical model now underpins things like account funnel, conversion from MQL to SQL, ARR metrics, and partners.

- Time-to-market for new slices and populations dropped sharply, since new needs plug into the same model instead of bespoke pipelines.

- They migrated to Tableau Cloud, with Pulse as their metric store and a marketplace of certified datasets for self-service.

Business users can now:

- drag and drop from certified datasets into their own views

- rely on a “freedom in a box” model, where they can explore safely without breaking core definitions

- use Copilot capabilities inside Tableau to build content on top of the shared semantics

On top of that, HiBob connects Snowflake to Cortex so people can “talk to your data.” If something is modeled and in the semantic layer, you can ask it questions.

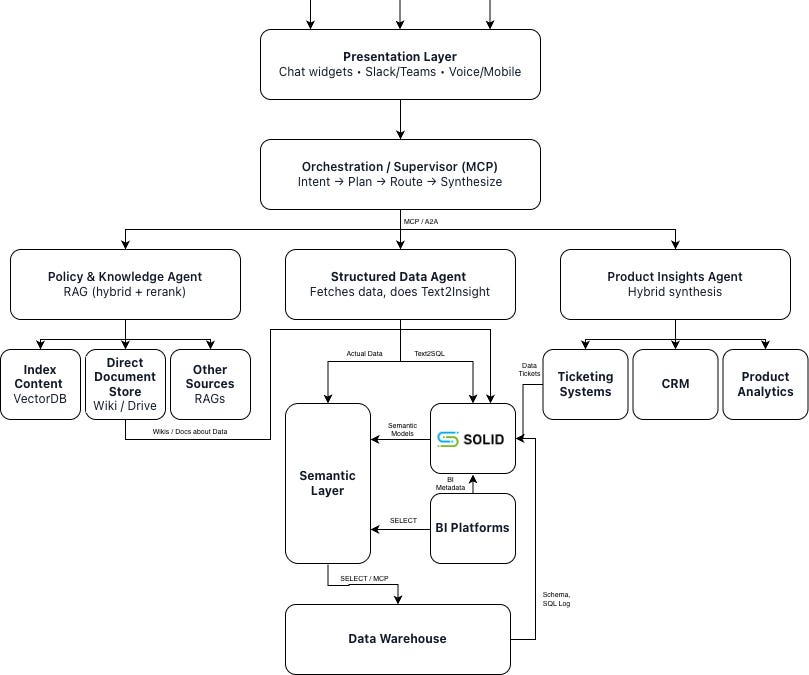

Reut and her team are now designing an agent architecture where:

- a 360-degree “super-agent” sees across all domains

- domain-specific sub-agents handle marketing, sales, CS, finance, etc

- each agent has personas tuned to different user types

And importantly, they want to marry conversational and visual experiences. As she said, a chart inside a chat window is nice, but most users still want to filter, slice, and explore.

If you’re curious how we think about this from the product side, I wrote about some of the patterns in AI for AI: how to make “chat with your data” attainable within 2025

Step Five: AI Mind and 2,500 GPTs as a flywheel

All of this would already be a big story. But HiBob did not stop at BI or analytics.

Parallel to Reut’s work, the company has been running an internal AI program called AI Mind, led by a dedicated team in Business Technologies.

Executives gave it real sponsorship:

- AI days across the company

- broad access to ChatGPT, Gemini and others

- internal AI leaders and “AI champions” in each business unit

- structured training and celebrations around wins

As Reut described it, “from everywhere you went,” the message was clear: AI is part of your job.

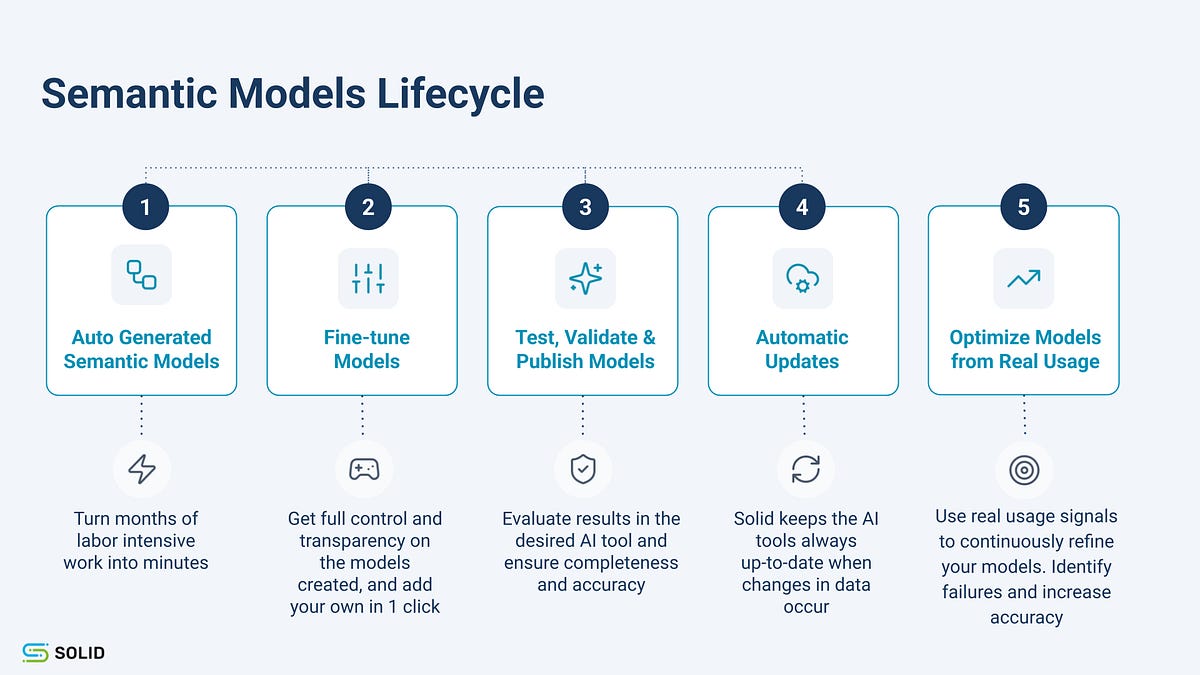

Employees started by building thousands of custom GPTs for their own pains: meeting prep, upsell ideas, content, process assistants, and more. The AI Mind team then introduced a five-step framework to turn GPTs into full agents or “digital companions,” consistent with the OpenAI case study about HiBob.

A few key parts of that framework:

- Idea and proof of concept. Employees propose GPTs rooted in real pain points.

- Build. The AI Mind team and engineers help turn them into secure, compliant agents.

- Adoption & enablement. Documentation, training, and named owners.

- Maintenance. Feedback loops and performance reviews so agents do not rot.

- Scale. Successful agents are cataloged and reused as part of a growing internal directory.

As Reut emphasized, agents are now treated “just like an employee”: they need maintenance, versions, and performance reviews, and they require people to own their evolution.

This is very similar to what we see with Solid customers who go beyond experiments and actually operationalize AI. Tools are important. Culture, ownership, and structure are decisive.

Step Six: Capturing the new data AI creates

Most companies ask: “How do I make the data in my warehouse available to AI?”

Reut is already working on the flip side: how to capture and model the data created by AI and people using AI

She gave a simple example:

“Do you know how much data you can extract from a phone call with a customer or with a prospect?”

Transcripts, sentiment, product interest, objections, competitive mentions, outcomes. For years, that lived in call recordings and people’s heads. Now, agents can extract and structure it.

Same story for:

- PDFs

- screenshots of dashboards that can now be “read”

- free-form interactions inside agents or GPTs

Her 2026 roadmap (and she wisely only plans three months at a time) focuses on:

- keeping the data organization itself augmented with agents

- giving the business a one-stop shop where they can analyze, chat, and act on data

- modeling the new AI-generated data fast enough that it feeds back into the semantic layer

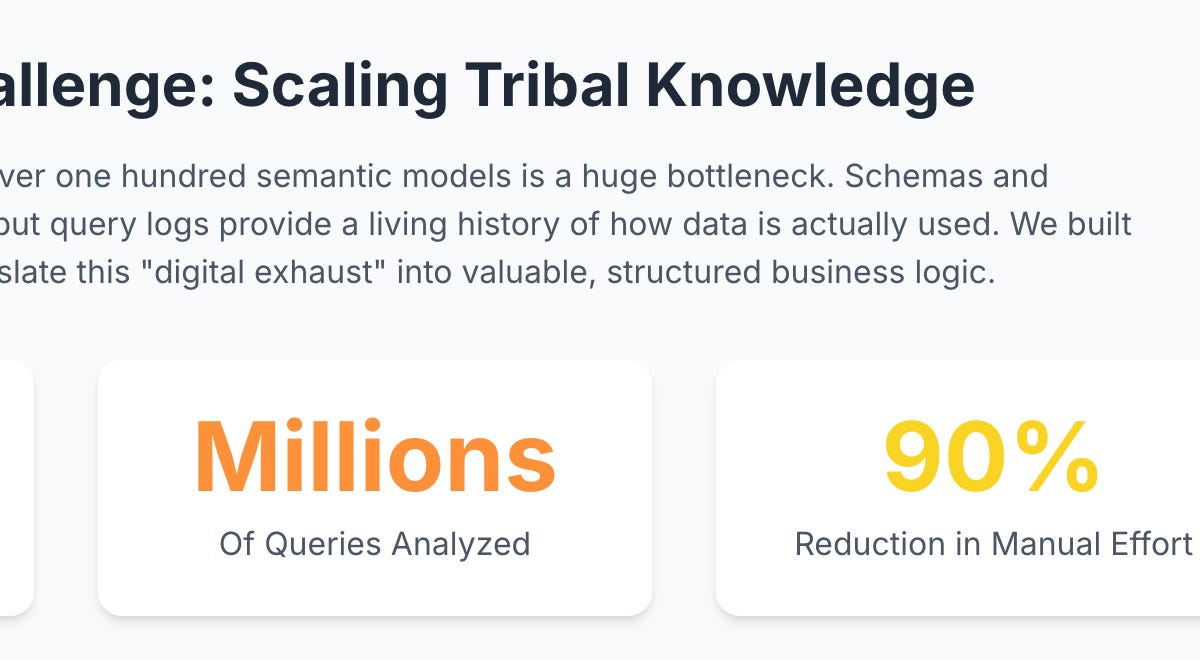

This resonates a lot with our post Your Data’s Diary: Why AI Needs to Read It. AI is not just another consumer of your warehouse. It is a massive producer of new exhaust you can learn from.

Step Seven: Agents as teammates, not toys

Towards the end of the episode, I asked Reut for a prediction about AI over the next five years. Her answer:

“I think that agents are going to be part of the teams and the org structures. I think that it’s inevitable.”

We also talked about a company I’d seen where agents already had their own Slack bots and were chatting with one another in a shared channel, like a team of colleagues.

At HiBob, that future is not theoretical. They are already:

- treating agents like digital teammates, with roles, goals, and owners OpenAI

- considering what it would mean to give them employee-like identities in systems like Bob itself

- running them through CI/CD, versioning, and performance reviews

It fits perfectly with the AI Mind framing in the OpenAI story, where GPTs and agents are part of a “shared learning cycle” instead of random side projects.

My takeaways for other data and AI leaders

A few things I think are worth stealing from Reut and the HiBob team:

- Compress the infrastructure transformation.

They did not accept a two-year migration. They picked a six-month window, staffed it, and used AI to make the hard parts tractable. - Go “back to basics” on modeling.

Semantic layers and proper analytical models are not old-fashioned. They are prerequisites if you want reliable agents and “chat with your data” that people trust. - Give the business a constrained playground.

Freedom in a box works: certified data, powerful self-service, plus conversational tools on top. Users feel empowered but cannot accidentally rewrite the company’s metrics. - Make AI everyone’s job, with guardrails.

AI Mind, AI champions, and a structured funnel from idea → GPT → agent → product are what turned 2,500 experiments into a flywheel, not chaos. OpenAI - Treat agents like teammates from day one.

They need owners, training, maintenance, and clear value metrics. Otherwise, they become yet another set of abandoned dashboards. - Remember the slogan: “No AI can fix bad data.”

That is the line Reut put on a slide for her team:

“No AI can fix bad data.”

- In our own work at Solid, we see this daily. You can paper over some issues with clever prompts, RAG, or filters. But if your semantics are broken and your source data is a mess, AI will happily hallucinate its way into bad decisions.

If you’re interested in how we at Solid help teams like Reut’s build semantic layers and AI-ready analytics, you might enjoy:

- Building an AI-powered Intelligent Enterprise (my earlier recap of a conversation with Meenal Iyer of SurveyMonkey)

- Almost no AI in production (on why the gap between demos and reality is still so large)

And of course, if you want to talk about your own data and AI journey, feel free to reach out to us at Solid anytime

Other posts